Comparing Popular Methods for Evaluating Chatbot Performance

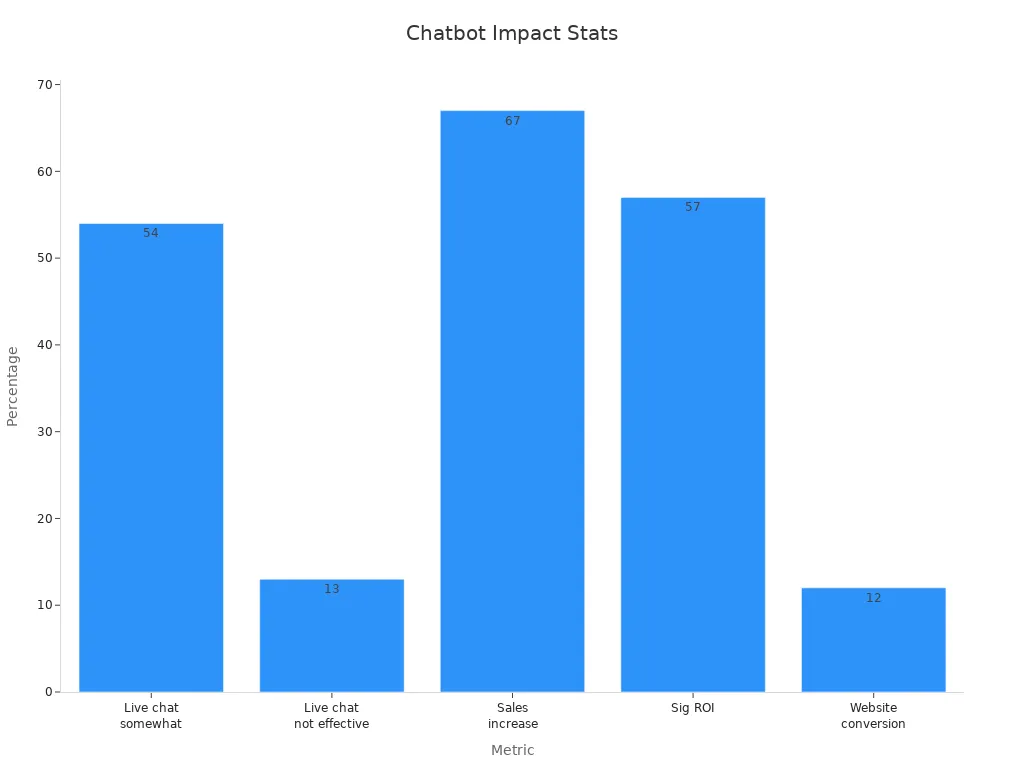

Chatbot performance shapes customer satisfaction, operational efficiency, and business outcomes across industries, so measuring it carefully matters. Over 90% of consumers think businesses should use chatbots, companies using chatbots report a 67% increase in sales, and chatbots saved an estimated 2.5 billion hours in 2023. The right tools and methods, such as those offered by Sobot AI, help businesses optimize customer interactions. The following table highlights key metrics:

| Metric Description | Statistic / Percentage | Impact Area |
|---|---|---|
| Customers finding live chat “somewhat effective” | 54% | Customer Satisfaction |
| Sales increase for businesses using chatbots | 67% | Business Outcomes (Sales) |
| Hours saved by chatbots in 2023 | 2.5 billion hours | Operational Efficiency |
| Businesses generating more high-quality leads via chatbots | Over 50% | Business Outcomes (Lead Gen) |

Businesses seeking how to evaluate chatbot performance benefit from a careful comparison of available tools and strategies.

Chatbot Performance in Customer Service

Key Metrics for Performance

Businesses use clear metrics to measure chatbot performance in customer service. These performance metrics help teams understand how well chatbots handle customer questions and improve support. Some important metrics include agent experience score, false positive rate, bot repetition rate, and positive feedback rate. These show how often chatbots give correct responses, how many times they repeat answers, and how satisfied customers feel after using the service.

| Metric Name | Description & Significance |
|---|---|
| Agent Experience Score | Measures live agent interaction quality using signals like abandonment, wait time, handle time, and sentiment. |
| False Positive Rate | Rate at which the chatbot incorrectly classifies utterances with high confidence; indicates NLU quality. |
| Bot Repetition Rate | Frequency of the bot repeating itself, indicating potential conversational design issues. |
| Positive Feedback Rate | Ratio of positive feedback to total feedback, providing a balanced view of user satisfaction. |
| NLU Rate | Measures how often the chatbot correctly matches user utterances to intents at or above a confidence threshold. |
| Interaction Volume | Total number of chatbot conversations in a timeframe, indicating adoption and usage. |
| Bounce Rate | Percentage of users who start but quickly abandon a chatbot interaction, signaling engagement issues. |
| Conversation Length | Average number of messages or duration per conversation, reflecting engagement and query complexity. |
| Handle Time | Average time to resolve queries, assessing chatbot efficiency compared to human agents. |
| Bot Automation Score | Share of conversations in which the bot fully meets the customer's need without escalation; each conversation is scored pass/fail based on the absence of negative signals. |
| Cost per Automated Conversation | Calculates ROI by considering platform costs, conversation volume, and genuine automation effectiveness. |

Sobot’s AI Chatbot uses these metrics to track and improve its responses. For example, Sobot’s chatbot can handle high interaction volumes and maintain a low bounce rate, showing strong adoption and engagement. The chatbot’s depth and breadth of responses also help increase the self-service rate, which means more customers get answers without needing a human agent.
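To make several of the table's definitions concrete, here is a minimal Python sketch that computes them from a conversation log. The `Turn` and `Conversation` record shapes, the 0.7 confidence threshold, and the exact formulas (for example, counting a conversation as "bounced" when the user sent at most one message, or excluding bounces from automated conversations) are illustrative assumptions, not Sobot's or any vendor's official definitions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Turn:
    """One user message and the bot's reply (hypothetical log schema)."""
    predicted_intent: str
    confidence: float
    true_intent: str   # reviewer-assigned label, needed for accuracy metrics
    bot_reply: str


@dataclass
class Conversation:
    turns: List[Turn] = field(default_factory=list)
    escalated: bool = False          # handed off to a human agent
    feedback: Optional[str] = None   # "positive", "negative", or None


def chatbot_metrics(convs: List[Conversation], platform_cost: float,
                    threshold: float = 0.7) -> dict:
    turns = [t for c in convs for t in c.turns]
    if not turns:
        raise ValueError("need at least one conversation with messages")
    confident = [t for t in turns if t.confidence >= threshold]

    # NLU rate: share of all turns matched to the correct intent at or above
    # the confidence threshold (assumed definition).
    nlu_rate = sum(t.predicted_intent == t.true_intent for t in confident) / len(turns)
    # False positive rate: share of all turns matched confidently but wrongly.
    false_positive_rate = sum(t.predicted_intent != t.true_intent for t in confident) / len(turns)

    # Bot repetition rate: replies identical to the bot's previous reply
    # in the same conversation.
    repeats = sum(prev.bot_reply == cur.bot_reply
                  for c in convs for prev, cur in zip(c.turns, c.turns[1:]))

    # Bounce rate: conversations abandoned after at most one user message.
    bounced = [len(c.turns) <= 1 for c in convs]

    # Positive feedback rate: positive ratings over all ratings received.
    rated = [c.feedback for c in convs if c.feedback is not None]

    # Cost per automated conversation: platform cost divided by conversations
    # the bot finished without escalation, excluding bounces (abandoned, not resolved).
    automated = sum(not c.escalated and not b for c, b in zip(convs, bounced))

    return {
        "interaction_volume": len(convs),
        "nlu_rate": nlu_rate,
        "false_positive_rate": false_positive_rate,
        "repetition_rate": repeats / len(turns),
        "bounce_rate": sum(bounced) / len(convs),
        "positive_feedback_rate": rated.count("positive") / len(rated) if rated else None,
        "cost_per_automated_conversation": platform_cost / automated if automated else None,
    }
```

Excluding bounced conversations from the automated count is one way to reflect "genuine automation effectiveness": a user who leaves after one message was not necessarily helped.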

Impact on Customer Experience

Chatbots now handle a large share of customer interactions in many industries. Juniper Research projected that by 2023, chatbots would manage over 75% of customer service conversations. Customers benefit from faster responses, shorter wait times, and 24/7 support. Studies confirm that easy-to-use chatbots boost customer satisfaction, and businesses can track these improvements with metrics like Net Promoter Score (NPS) and message feedback.
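NPS follows a standard definition: the percentage of promoters (scores 9-10) minus the percentage of detractors (scores 0-6) on a 0-10 "how likely are you to recommend us" question, giving a value between -100 and 100. The sketch below applies that formula to two small made-up sets of post-chat survey scores; the score lists are illustrative only, not data from the studies mentioned here.

```python
from typing import Iterable


def net_promoter_score(scores: Iterable[int]) -> float:
    """NPS on the standard 0-10 'likelihood to recommend' scale."""
    scores = list(scores)
    if not scores:
        raise ValueError("need at least one survey response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be on a 0-10 scale")
    promoters = sum(s >= 9 for s in scores)   # 9-10
    detractors = sum(s <= 6 for s in scores)  # 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(scores)


# Illustrative survey scores before and after a chatbot change.
before = [10, 9, 6, 7, 3, 8, 9, 5]
after = [10, 9, 9, 7, 6, 8, 10, 9]
print(net_promoter_score(before), net_promoter_score(after))  # 0.0 50.0
```

Passives (7-8) count toward the total but neither add nor subtract, which is why NPS can move even when the average score barely changes.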

Companies like Sobot help businesses deliver real-time, accurate responses across channels. For example, Sobot’s chatbot supports multiple languages and provides instant answers, which improves customer satisfaction and reduces agent workload.

| Metric / Case Study | Quantitative Evidence / Impact | Sector Examples |
|---|---|---|
| Customer inquiries answered by chatbots | 60-90% of chat issues resolved by chatbots, with 10-40% escalated to human agents | Energy, Media/Entertainment, E-commerce, Customer Service |
| Increase in conversation volume | Up to 40% increase in chat volume with 24/7 chatbot support | General across sectors |
| Time savings | Varma saved 330 hours per month, enabling reassignment of two agents' responsibilities | Pension insurance |
| Customer satisfaction metrics | Improvements tracked via NPS, message feedback, unanswered message rates | Cross-sector applicability |
| Queue and response time improvements | Reduction in queue length and first response time, enhancing customer experience | Customer Service |
| Agent workload and satisfaction | Deflection and automation reduce repetitive queries, allowing agents to focus on complex issues | Customer Service |
| Cost savings | Amazon achieved million-dollar savings through chatbot capacity planning | E-commerce |

Sobot’s chatbot performance analysis shows that strong performance metrics lead to better customer experiences. Customers receive quick, helpful responses, and businesses see higher satisfaction scores and lower costs.

How to Evaluate Chatbot Performance

Human Evaluation Methods

Human evaluation methods show how a chatbot performs in real conversations. Both experts and regular users play a role. Evaluators often use structured questions, such as those based on Bloom’s Taxonomy, to test chatbot responses, and they judge empathy, tone, and how well the chatbot follows conversational rules. For example, some studies use a 5-point Likert scale to rate chatbot answers for clarity, helpfulness, and correctness. Using multiple evaluators, including both experts and novices, can reduce bias and improve reliability. In healthcare, professionals and patients may both score chatbot responses to ensure accuracy and trust. Clear guidelines and scoring rubrics keep results consistent. These methods show that human judgment remains important in chatbot performance analysis.

Tip: Combining expert and user feedback gives a more complete picture of chatbot strengths and weaknesses.
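When several people score the same chatbot answers on a Likert scale, two numbers are usually worth reporting: the average score per criterion, and how much the raters agree. The sketch below computes both. Using Cohen's kappa for agreement is our choice of technique, not one prescribed by the studies above, and the expert and novice ratings are made-up sample data.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, Sequence


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Agreement between two raters beyond what chance alone would produce."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Expected agreement if each rater kept their own label frequencies
    # but assigned labels independently of the other rater.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical label; kappa is undefined, treat as full agreement
    return (observed - expected) / (1 - expected)


def mean_scores(ratings: Dict[str, Dict[str, List[int]]]) -> Dict[str, float]:
    """Average 1-5 score per criterion, pooled across all raters and answers."""
    pooled: Dict[str, List[int]] = {}
    for per_criterion in ratings.values():
        for criterion, scores in per_criterion.items():
            pooled.setdefault(criterion, []).extend(scores)
    return {criterion: mean(scores) for criterion, scores in pooled.items()}


# Illustrative ratings of the same five chatbot answers by two raters.
ratings = {
    "expert": {"clarity": [5, 4, 4, 3, 5], "correctness": [4, 4, 2, 3, 5]},
    "novice": {"clarity": [5, 4, 3, 3, 5], "correctness": [5, 4, 3, 3, 5]},
}
print(mean_scores(ratings))
for criterion in ("clarity", "correctness"):
    k = cohens_kappa(ratings["expert"][criterion], ratings["novice"][criterion])
    print(criterion, round(k, 2))
```

Plain Cohen's kappa treats Likert points as unordered categories, so a 4-versus-5 disagreement counts the same as 1-versus-5; a weighted kappa is a common alternative for ordinal scales.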

Automated Metrics and KPIs

Automated metrics and KPIs make it easier to evaluate chatbot performance at scale. These tools measure how often chatbots answer questions correctly, how often they need help from a human, and how satisfied users feel. Common KPIs include customer satisfaction (CSAT), fallback rate, handoff rate, and resolution rate. For example, a high fallback rate means the chatbot fails to understand many questions. Automated systems can also track engagement rate, bounce rate, and average chat duration. These performance metrics help businesses spot problems quickly and improve chatbot responses. Sobot’s AI Chatbot uses dashboards to monitor these KPIs, making it simple to see trends and set alerts for issues. Automated evaluation offers speed and consistency but can miss the deeper meaning behind user interactions.

| KPI | Description & Alert Thresholds |
|---|---|
| Customer Satisfaction | Alert if CSAT drops below 80% |
| Fallback Rate | Alert if over 15% |
| Handoff Rate | Alert if up by 20% week-over-week |
| Resolution Rate | Alert if below 60% |
| Engagement Rate | Measures user activity |
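The alert thresholds in the table translate directly into simple checks. The sketch below assumes KPIs have already been aggregated per week as fractions between 0 and 1, and it reads "up by 20% week-over-week" as a relative increase (for example, 20% to 24% handoffs) rather than 20 percentage points; the table does not say which is meant. The `WeeklyKpis` record is hypothetical, not a Sobot dashboard API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WeeklyKpis:
    csat: float             # share of satisfied survey responses, 0-1
    fallback_rate: float    # share of messages the bot could not understand
    handoff_rate: float     # share of conversations escalated to an agent
    resolution_rate: float  # share of conversations resolved by the bot


def kpi_alerts(current: WeeklyKpis, previous: Optional[WeeklyKpis] = None) -> List[str]:
    """Apply the table's alert thresholds to one week of KPIs."""
    alerts = []
    if current.csat < 0.80:
        alerts.append(f"CSAT {current.csat:.0%} is below 80%")
    if current.fallback_rate > 0.15:
        alerts.append(f"Fallback rate {current.fallback_rate:.0%} is over 15%")
    # Week-over-week check needs last week's value (assumed relative change).
    if previous is not None and previous.handoff_rate > 0:
        change = (current.handoff_rate - previous.handoff_rate) / previous.handoff_rate
        if change > 0.20:
            alerts.append(f"Handoff rate up {change:.0%} week-over-week")
    if current.resolution_rate < 0.60:
        alerts.append(f"Resolution rate {current.resolution_rate:.0%} is below 60%")
    return alerts


# Illustrative numbers, not real dashboard data.
last_week = WeeklyKpis(csat=0.85, fallback_rate=0.10, handoff_rate=0.20, resolution_rate=0.70)
this_week = WeeklyKpis(csat=0.78, fallback_rate=0.17, handoff_rate=0.25, resolution_rate=0.58)
for alert in kpi_alerts(this_week, last_week):
    print("ALERT:", alert)
```

Engagement rate has no threshold in the table, so the sketch only monitors the four KPIs that do.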

User Feedback and Surveys

User feedback and surveys are key to evaluating chatbot performance from the customer’s point of view. After a chat, users may rate their experience or answer a short survey, which shows whether the chatbot met their needs. Studies show that user ratings often track chatbot accuracy, especially when chatbots give complete and clear answers. For example, chatbots like ZenoChat and ChatFlash scored high for correctness and completeness, which matched positive user ratings. Sobot’s chatbot collects feedback after each session, helping teams find areas to improve. Survey response rates can be low, but the data still gives valuable insight into user satisfaction and chatbot reliability.

Note: User feedback highlights what matters most to customers, such as quick answers and helpful responses.
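Because response rates can be low, a positive feedback rate based on a few dozen ratings is noisy. One way to show this is to report the response rate alongside a confidence interval for the positive rate. The sketch below uses the Wilson score interval, a standard interval for proportions; choosing it here is our assumption, and the counts are illustrative.

```python
import math


def feedback_summary(positive: int, negative: int, sessions: int, z: float = 1.96) -> dict:
    """Positive feedback rate with a Wilson score interval (z=1.96 gives ~95%)."""
    responses = positive + negative
    if responses == 0:
        raise ValueError("no feedback collected")
    if responses > sessions:
        raise ValueError("more feedback responses than sessions")
    p = positive / responses
    denom = 1 + z * z / responses
    center = (p + z * z / (2 * responses)) / denom
    half = z * math.sqrt(p * (1 - p) / responses + z * z / (4 * responses ** 2)) / denom
    return {
        "response_rate": responses / sessions,
        "positive_rate": p,
        "ci_low": max(0.0, center - half),
        "ci_high": min(1.0, center + half),
    }


# 50 ratings out of 1,000 sessions versus 500 ratings out of 10,000.
print(feedback_summary(45, 5, 1000))
print(feedback_summary(450, 50, 10000))
```

Both cases show a 90% positive rate, but the interval is much wider with 50 ratings. The interval only captures sampling noise; it cannot correct for non-response bias if the customers who answer differ from those who do not.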

Task Success Rate

Task success rate measures how well chatbots complete the jobs they are given, which makes it a direct view of chatbot performance in real-world situations. Important metrics include task completion rate, error rate, response time, and first contact resolution. For example, a SaaS onboarding chatbot that reduces time-to-value by 30% shows high task success. Sobot’s chatbot tracks these metrics in analytics dashboards, so businesses can see how often users get the help they need without extra steps. Monitoring task success helps companies spot gaps in chatbot responses and improve future performance. High task success rates indicate that a chatbot is reliable and efficient for users.

| Metric | Definition and Importance |
|---|---|
| Task Completion Rate | Shows how well the chatbot finishes assigned tasks |
| Error Rate | Tracks how often the chatbot gives wrong or failed responses |
| Response Time | Measures how fast the chatbot replies |
| First Contact Resolution | Percentage of issues solved in the first interaction |
| Scalability Metrics | Checks performance under different user loads |
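The first four metrics in the table can be computed from per-session records. The sketch below assumes a hypothetical `Session` record with the fields shown; it reports response time as a median and a nearest-rank 95th percentile, since averages hide the slow replies users notice most. These definitions are illustrative choices, not Sobot's.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class Session:
    task_completed: bool            # user reached the goal (e.g., order tracked)
    errors: int                     # wrong or failed bot responses in the session
    bot_replies: int                # total bot responses in the session
    contacts: int                   # times the user had to reach out about this issue
    response_times_s: List[float]   # seconds taken for each bot reply


def task_success_report(sessions: List[Session]) -> dict:
    total_replies = sum(s.bot_replies for s in sessions)
    times = sorted(t for s in sessions for t in s.response_times_s)
    if not sessions or total_replies == 0 or not times:
        raise ValueError("need sessions with at least one bot reply")
    n = len(sessions)
    # Nearest-rank percentile: the smallest value with at least 95% of samples at or below it.
    p95 = times[max(0, math.ceil(0.95 * len(times)) - 1)]
    return {
        "task_completion_rate": sum(s.task_completed for s in sessions) / n,
        "error_rate": sum(s.errors for s in sessions) / total_replies,
        # First contact resolution: solved, and the user only had to ask once.
        "first_contact_resolution": sum(s.task_completed and s.contacts == 1 for s in sessions) / n,
        "median_response_s": median(times),
        "p95_response_s": p95,
    }


sessions = [
    Session(True, 0, 3, 1, [0.5, 0.7, 0.6]),
    Session(True, 1, 4, 2, [0.9, 1.2, 0.8, 0.7]),
    Session(False, 2, 3, 1, [2.5, 0.6, 0.4]),
]
print(task_success_report(sessions))
```

Scalability metrics are not covered here; they usually come from load tests rather than production session logs.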

Comparison of Evaluation Methods

Strengths and Weaknesses

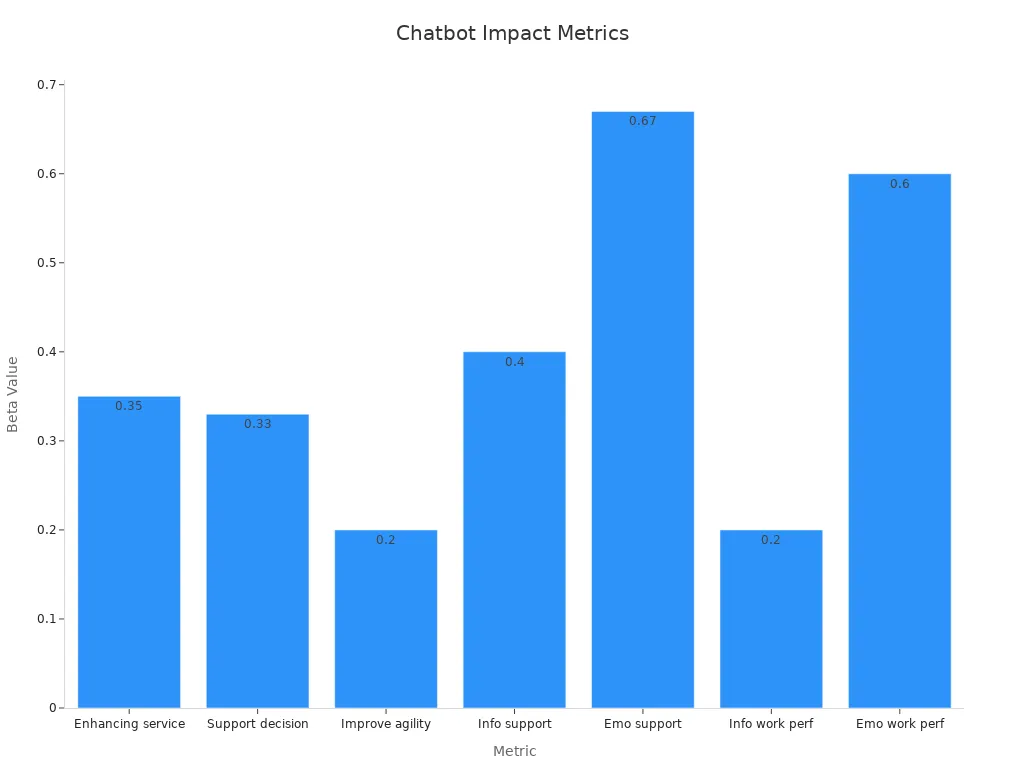

Different methods for evaluating chatbots offer unique strengths and weaknesses. Human evaluation brings deep insight into empathy, tone, and context. Automated metrics provide speed and consistency. User feedback highlights real-world satisfaction. Task success rates show how well a chatbot completes assigned jobs.

The following table summarizes findings from recent studies that compare evaluation methods across various domains:

| Study Focus | Dataset(s) Used | Evaluation Methods | Key Findings |
|---|---|---|---|
| ChatGPT vs Human Coders on Planning Documents | Climate change plans | Comparison with human coders | ChatGPT aligns well but struggles with domain-specific jargon |
| ChatGPT 4.0 on Journal Article Quality | UK Research Excellence Framework 2021 guidelines | Comparison with author self-evaluations | Automated evaluations show reasonable accuracy |
| ChatGPT on Programming Tasks | Kattis (127 programming problems) | Success rate measurement | Proficient on simple tasks, struggles with complex ones (19/127 solved) |
| ChatGPT in Face Biometrics | Public benchmarks | Comparison with state-of-the-art methods | Enhances explainability and robustness in biometric systems |
| LLMs on News Truthfulness | 100 fact-checked news items | Black box testing | Varied proficiency in truth verification |
| ChatGPT on Medical Questions | 284 questions from 33 physicians | Likert scale ratings, statistical analysis | High accuracy but limitations in complex cases |
| ChatGPT on Ophthalmology Exam Questions | BCSC and OphthoQuestions banks | Accuracy assessment | Improved performance with newer versions |
| ChatGPT on Emotional Awareness | 20 emotional scenarios | Comparison with humans using emotional awareness scale | Superior and improving performance over time |
| ChatGPT in Scientific Research | AI-generated articles and abstracts | ANOVA, thematic analysis by reviewers | High-quality content but challenges in methodology and literature review |

These studies show that automated evaluation methods work well for speed and scale. However, they may miss subtle errors or context-specific issues. Human evaluation captures nuance but takes more time and resources. User feedback provides direct insight but can be inconsistent. Task-based benchmarking helps measure real-world effectiveness.

Sobot’s AI Chatbot uses a blend of these methods. The platform tracks automated metrics, collects user feedback, and monitors task completion rates. This approach helps businesses understand both the strengths and weaknesses of their chatbot solutions.

Note: No single method covers every aspect of chatbot performance. Combining methods gives a more complete picture.

Best-Use Scenarios

Each evaluation method fits best in certain situations. Businesses should choose based on their goals, resources, and the complexity of their chatbots.

- Peer-reviewed studies ensure reliable results. They help when accuracy and rigor matter most.

- Automated metrics work well for large-scale monitoring. They suit businesses with high chat volume.

- Human evaluation is best for new chatbot launches or when testing empathy and tone.

- User feedback and surveys help when customer satisfaction is a top priority.

- Mixed-methods case studies are useful in complex settings, such as healthcare or retail, where both numbers and stories matter.

For example, Sobot’s clients in retail and e-commerce often use automated metrics to track thousands of daily interactions. In contrast, healthcare providers may rely on human evaluation and mixed-methods evaluation to ensure safety and trust. A cancer care program evaluation found that while quantitative data showed no significant change, interviews revealed important process improvements. This shows that mixed-methods evaluation can uncover benefits that numbers alone might miss.

Tip: Match the evaluation method to your business needs and the complexity of your chatbot.

Scalability and Efficiency

Scalability and efficiency matter when businesses deploy chatbots across many channels or handle high interaction volumes. Automated metrics and benchmarking tools allow companies to track performance quickly and at scale. These methods help identify trends, spot issues, and optimize chatbot responses without manual effort.

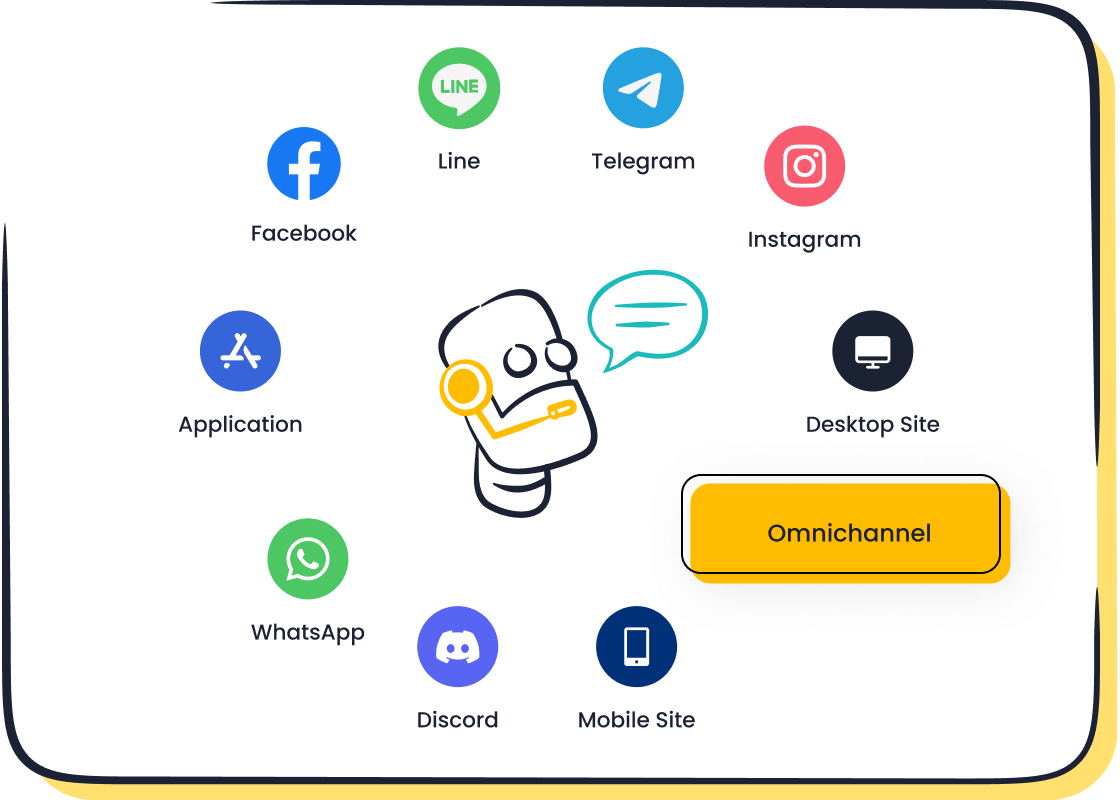

For instance, Sobot’s AI Chatbot supports omnichannel interactions and provides real-time analytics dashboards. This enables businesses to monitor thousands of conversations daily. Automated benchmarking helps compare chatbot performance over time and across different business units.

Human evaluation and user surveys, while valuable, may not scale as easily. They require more time and resources. However, they remain important for periodic deep dives or when launching new features.

Businesses should use automated methods for ongoing monitoring and reserve human evaluation for targeted reviews or critical updates.

By combining scalable automated tools with periodic human checks, companies can ensure their chatbots deliver consistent, high-quality service as they grow.
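A common way to pair automated monitoring with periodic human checks is to send reviewers a small sample of conversations from each business unit, always including conversations that automated monitoring has flagged (for example, negative feedback or a failed resolution). The sketch below implements that stratified sampling. The `(unit, conversation_id)` input format, the `flagged` set, and the per-unit quota are hypothetical choices for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def sample_for_review(conversations: List[Tuple[str, str]], per_unit: int,
                      flagged: Iterable[str] = (), seed: int = 0) -> Dict[str, List[str]]:
    """Pick up to `per_unit` conversation ids per business unit for human review.

    Flagged conversations are included first; the rest of each quota is filled
    by simple random sampling, so small units are not drowned out by large ones.
    """
    flagged = set(flagged)
    rng = random.Random(seed)  # fixed seed makes the review batch reproducible
    by_unit: Dict[str, List[str]] = defaultdict(list)
    for unit, conv_id in conversations:
        by_unit[unit].append(conv_id)

    picks = {}
    for unit in sorted(by_unit):
        ids = by_unit[unit]
        must = [c for c in ids if c in flagged][:per_unit]
        rest = [c for c in ids if c not in flagged]
        k = min(per_unit - len(must), len(rest))
        picks[unit] = must + rng.sample(rest, k)
    return picks


# Illustrative data: a large retail unit and a small finance unit.
convs = [("retail", f"retail-{i}") for i in range(100)] + \
        [("finance", f"finance-{i}") for i in range(10)]
print(sample_for_review(convs, per_unit=5, flagged={"retail-3"}))
```

Sampling per unit rather than from the whole pool keeps low-volume units visible to reviewers, which matters when comparing performance across business units.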

AI Chatbots Comparison: Tools and Platforms

Sobot Chatbot Overview

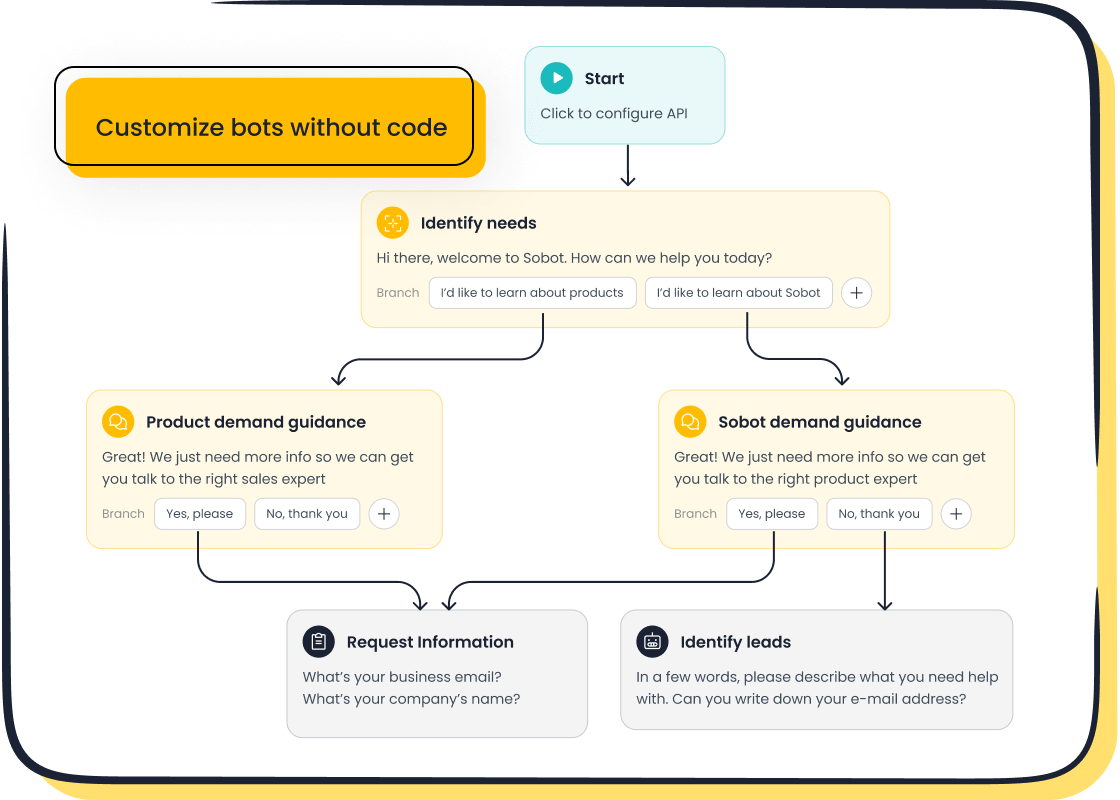

Sobot stands out among leading AI chatbots for business. The platform offers a user-friendly interface and supports omnichannel communication. Sobot’s AI chatbot features include multilingual support, 24/7 availability, and no-code setup. Businesses in retail, finance, and e-commerce use Sobot to automate customer service and boost efficiency. The 'Global Natural Language Processing Algorithms Market Innovation Trends 2025-2032' report highlights Sobot’s strengths in the NLP and AI chatbot market, showing its strong market position and advanced technology. OPPO, a global smartphone brand, improved its customer service with Sobot. After implementation, OPPO achieved an 83% chatbot resolution rate and a 94% positive feedback rate. These results illustrate the reliable automation Sobot delivers for companies that need it.

Botium

Botium is a popular tool for chatbot software comparison and testing. It helps businesses test AI chatbots across different platforms. Botium supports automated testing, regression checks, and performance benchmarking. Companies use Botium to ensure their chatbots respond accurately and handle various scenarios. The tool works well for teams that want to improve chatbot quality before launch. Botium’s focus on automation makes it a strong choice for large-scale chatbot deployments.
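Botium expresses test cases in its own script format; the Python sketch below does not use Botium's API. It is a generic illustration of the underlying idea of regression testing: replay scripted multi-turn conversations against the bot and flag any step whose reply no longer contains the expected text. The `toy_bot_session` bot and the `SCRIPTS` cases are hypothetical stand-ins for a real chatbot client and test suite.

```python
from typing import Callable, Dict, List, Tuple

# A scripted conversation: (user message, text the reply must contain).
Script = List[Tuple[str, str]]


def run_regression(new_session: Callable[[], Callable[[str], str]],
                   scripts: Dict[str, Script]) -> Dict[str, str]:
    """Replay each script in a fresh session; return failures by script name."""
    failures = {}
    for name, steps in scripts.items():
        reply_fn = new_session()  # fresh state so scripts cannot affect each other
        for i, (user_msg, expected) in enumerate(steps):
            reply = reply_fn(user_msg)
            if expected.lower() not in reply.lower():
                failures[name] = f"step {i}: expected {expected!r}, got {reply!r}"
                break
    return failures


def toy_bot_session() -> Callable[[str], str]:
    """Hypothetical stateful bot used only to demonstrate the harness."""
    state = {"asked_order": False}

    def reply(msg: str) -> str:
        text = msg.lower().strip()
        if "track" in text:
            state["asked_order"] = True
            return "Sure, what is your order number?"
        if state["asked_order"] and text.isdigit():
            return f"Order {text} is on its way."
        return "Sorry, I did not understand that."

    return reply


SCRIPTS: Dict[str, Script] = {
    "track_order": [("I want to track my order", "order number"), ("12345", "on its way")],
    "digits_without_context": [("12345", "did not understand")],
    "fallback": [("blah", "did not understand")],
}
print(run_regression(toy_bot_session, SCRIPTS) or "all scripts passed")
```

Substring matching keeps the sketch simple; real test suites often match intents or use more flexible assertions, because exact reply wording changes frequently.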

Cyara

Cyara offers tools for testing and monitoring AI chatbots. The platform checks chatbot performance, voice quality, and user journeys. Cyara’s tools help businesses find issues in chatbot workflows and improve customer experience. The platform supports continuous testing, making it easier to maintain high-quality AI chatbots. Cyara is often used by companies that need to ensure their chatbots work well across multiple channels.

UserTesting

UserTesting takes a different approach to chatbot software comparison: it collects user feedback and measures real-world performance. UserTesting tracks metrics like satisfaction ratings, trustworthiness, and task completion rates. In one case, a consumer chatbot saw a 75% increase in containment rates and a 2.4% drop in call volume after improvements. UserTesting combines quantitative data with user comments, helping businesses understand both strengths and weaknesses while keeping improvements focused on real user needs.
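Containment rate is the share of conversations the bot finishes without a human handoff. A figure like "a 75% increase in containment" is most naturally read as a relative change, although the case study does not say so explicitly, and the two readings differ a lot. The sketch below uses illustrative numbers (not from the UserTesting case) to show that going from 20% to 35% containment is a 75% relative increase but only 15 percentage points.

```python
def containment_rate(contained: int, total: int) -> float:
    """Share of conversations finished without a human handoff."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= contained <= total:
        raise ValueError("contained must be between 0 and total")
    return contained / total


def compare(before: float, after: float) -> dict:
    """Express a change in a rate both ways, to avoid ambiguous reporting."""
    if before <= 0:
        raise ValueError("relative change is undefined when the baseline is 0")
    return {
        "percentage_point_change": 100 * (after - before),
        "relative_change_pct": 100 * (after - before) / before,
    }


before = containment_rate(200, 1000)  # 20% (illustrative)
after = containment_rate(350, 1000)   # 35% (illustrative)
print(compare(before, after))
```

Reporting both values side by side makes vendor and case-study claims easier to compare.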

Platform Feature Comparison

The following table shows a comparison of top chatbots for business, focusing on AI chatbot features and performance:

| Platform | Best For | Key AI Chatbot Features | Notable Results / Metrics |
|---|---|---|---|
| Sobot | Omnichannel, Multilingual, No-code | 24/7 support, proactive messaging, analytics | 83% resolution rate (OPPO), 94% positive feedback |
| Botium | Automated Testing | Regression, performance, scenario testing | High accuracy in pre-launch testing |
| Cyara | Continuous Monitoring | Voice, chatbot, journey testing | Improved workflow reliability |
| UserTesting | User Experience Evaluation | Satisfaction, trust, task completion | 75% increase in containment, 2.4% drop in call volume |

Tip: Businesses should choose chatbot platforms and evaluation tools based on their specific needs. Comparing AI chatbots helps identify the right tools for automation, testing, and user feedback.


AI Chatbot Platforms Comparison for Business Needs

Choosing the Right Platform

Selecting the best solution starts with a clear comparison of AI chatbot platforms. Businesses should look at how different AI chatbots meet their unique needs. Recent research highlights that platforms have evolved from simple text bots to advanced AI-driven business tools. Some focus on easy integration with messaging apps, while others offer deep analytics or support for complex workflows. For example, Sobot provides omnichannel support, multilingual capabilities, and a no-code setup, making it suitable for companies in retail, finance, and e-commerce. Kompas, a market research AI tool, shows that structured, reliable reporting can help businesses make informed decisions. Companies should match platform strengths to their goals, whether they need fast deployment, advanced analytics, or strong integration with existing systems.

Tip: A thorough comparison of AI chatbot platforms helps businesses align technology with their customer service and operational objectives.

Factors to Consider

When comparing AI chatbots, several factors stand out:

- Type of technology: Rule-based, AI, or hybrid models

- Integration: Ability to connect with CRM, contact centers, and messaging apps

- Analytics: Reporting on metrics like containment rate, first contact resolution, and customer satisfaction

- Security: Compliance with GDPR, CCPA, and other regulations

- Cost and scalability: Support for growth and budget alignment

- Features: Knowledge base integration, customization, multilingual support, and smooth escalation to human agents

Market data shows that chatbots can reduce query volume by up to 70% and respond three times faster than human agents. Businesses using AI chatbots often see a 30% reduction in support costs. These criteria help ensure that the chosen platform supports both current and future business needs.
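One simple way to apply these criteria is a weighted scoring matrix: rate each candidate from 1 to 5 on every factor, weight the factors by business priority, and rank by the weighted sum. The weights, the 1-5 scale, and the "Platform A" and "Platform B" ratings below are hypothetical; they do not rate any real product.

```python
from typing import Dict, List, Tuple

# Hypothetical priorities for one business; weights must sum to 1.
WEIGHTS: Dict[str, float] = {
    "integration": 0.25,
    "analytics": 0.20,
    "security": 0.20,
    "cost_scalability": 0.20,
    "features": 0.15,
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of 1-5 ratings across the comparison factors."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[k] * ratings[k] for k in weights)


def rank_platforms(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    scored = [(name, round(weighted_score(r), 2)) for name, r in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Illustrative ratings for two unnamed candidates.
CANDIDATES = {
    "Platform A": {"integration": 4, "analytics": 5, "security": 4, "cost_scalability": 3, "features": 4},
    "Platform B": {"integration": 5, "analytics": 3, "security": 4, "cost_scalability": 4, "features": 3},
}
print(rank_platforms(CANDIDATES))
```

Type of technology (rule-based, AI, or hybrid) is categorical, so it usually works better as a filter applied before scoring than as a weighted factor. Compliance requirements such as GDPR are often hard pass/fail gates for the same reason.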

Implementation Steps

Effective deployment of AI chatbots follows a structured process:

- Process Mapping: Define business goals and map out customer journeys.

- Phased Deployment: Start with a pilot, then expand based on feedback.

- Customization: Tailor conversation flows and integrate with existing systems.

- User Training: Educate staff and users on new features.

- Continuous Improvement: Monitor performance and refine responses.

- Testing and Scaling: Test in real-world scenarios, then scale up as needed.

- Security and Maintenance: Ensure data protection and regular updates.

Studies show that these steps lead to higher user adoption and better operational outcomes. Sobot supports businesses through each phase, offering technical guidance and analytics dashboards for ongoing optimization. Multi-turn conversation evaluation, as used in large language model chatbots, helps measure real-world performance and guides improvements.

Note: Careful planning and ongoing evaluation are key to comparing and deploying AI chatbot platforms successfully.
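The "start with a pilot, then expand" step works best with an explicit go/no-go rule agreed before the pilot starts. The sketch below reuses the alert thresholds from the KPI table earlier in this article as pilot targets (resolution rate at least 60%, CSAT at least 80%, fallback rate at most 15%) and adds a minimum number of pilot conversations; that minimum of 500 is an arbitrary assumption, as is the `pilot_gate` function itself.

```python
import operator
from typing import Dict, List, Tuple

CHECKS = {">=": operator.ge, "<=": operator.le}

# Targets taken from the KPI alert thresholds; adjust per business.
TARGETS: Dict[str, Tuple[str, float]] = {
    "resolution_rate": (">=", 0.60),
    "csat": (">=", 0.80),
    "fallback_rate": ("<=", 0.15),
}


def pilot_gate(metrics: Dict[str, float], conversations: int,
               targets: Dict[str, Tuple[str, float]] = TARGETS,
               min_conversations: int = 500) -> Tuple[bool, List[str]]:
    """Return (ready_to_expand, reasons_not_ready) for a pilot deployment."""
    reasons = []
    if conversations < min_conversations:
        reasons.append(f"only {conversations} pilot conversations; need {min_conversations}")
    for name, (op, target) in targets.items():
        value = metrics.get(name)
        if value is None:
            reasons.append(f"{name} was not measured")
        elif not CHECKS[op](value, target):
            reasons.append(f"{name} = {value:.0%}, target {op} {target:.0%}")
    return (not reasons, reasons)


# Illustrative pilot results.
print(pilot_gate({"resolution_rate": 0.72, "csat": 0.86, "fallback_rate": 0.09}, 800))
print(pilot_gate({"resolution_rate": 0.55, "csat": 0.86}, 300))
```

Requiring a minimum sample guards against expanding on the strength of a few lucky early conversations.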

Evaluating chatbots with the right methods leads to better customer outcomes and business growth. Companies that use systematic evaluation see real results. For example, LambdaTest improved operator efficiency by 40%, and Eye-oo increased revenue by €177,000 after optimizing their chatbot. Sobot helps businesses in customer service achieve similar success by offering advanced analytics and easy-to-use tools. Companies should track key metrics, gather customer feedback, and update their chatbots regularly to stay ahead.

| Company | Outcome | Business Impact |
|---|---|---|
| LambdaTest | 40% more efficient operators | Faster support, better customer engagement |
| Eye-oo | €177,000 more revenue, 86% faster responses | Higher sales, improved customer satisfaction |
| Lumen Technologies | ~$50M annual savings, 4 hours saved weekly | Boosted productivity, better customer experience |

Sobot’s focus on innovation and customer-centric solutions makes it a trusted partner for businesses aiming to deliver excellent customer service.

FAQ

What are the most important metrics for evaluating chatbot performance?

Businesses often track resolution rate, customer satisfaction, and task success rate. For example, Sobot’s AI chatbot achieved an 83% resolution rate for OPPO. These metrics help companies evaluate chatbot performance and improve customer service.

How can user feedback improve chatbot performance?

User feedback highlights real issues and helps teams evaluate chatbot performance. Sobot collects feedback after each chat session. This data shows where the chatbot needs improvement and helps increase customer satisfaction.

Why is comparing AI chatbots important for businesses?

Comparing AI chatbots helps companies choose the best solution for their needs. Comparing features, scalability, and performance ensures the chatbot fits business goals. Sobot offers omnichannel support and analytics, making it a strong choice for many industries.

How does Sobot ensure data security in its chatbot solutions?

Sobot follows strict data protection standards, including GDPR compliance and data encryption. These measures keep customer information safe. Secure AI chatbots help businesses build trust and meet legal requirements.

Can chatbots handle multiple languages and channels?

Yes. Sobot’s AI chatbot supports multiple languages and works across channels like WhatsApp, SMS, and web chat. This feature helps businesses reach more customers and improve chatbot performance in global markets.

Tip: Regularly evaluate chatbot performance to keep up with changing customer needs and technology trends.
