How Recurrent Neural Networks Work and Their Applications

A recurrent neural network (RNN) is a specialized type of neural network designed to handle sequential data. Unlike traditional networks, RNNs retain information from previous inputs, enabling them to process data where order and context are critical. This makes them ideal for tasks like revenue forecasting or anomaly detection. Their ability to handle inputs of varying lengths adds versatility, especially in natural language processing.

In customer service, RNNs play a transformative role. For example, Sobot's Chatbot leverages these networks to automate interactions, providing accurate, multilingual responses. By processing sequential customer queries efficiently, it enhances satisfaction and reduces operational costs.

What Are Recurrent Neural Networks (RNNs)?

Definition and Purpose

How RNNs differ from traditional neural networks

Recurrent neural networks (RNNs) stand out from traditional neural networks due to their unique ability to process sequential data. Unlike traditional networks, which treat each input independently, RNNs use hidden states to retain information from previous inputs. This allows them to "remember" context, making them ideal for tasks like language translation or speech recognition.

RNNs are trained with a variant of backpropagation called backpropagation through time (BPTT). BPTT unrolls the network across the sequence and sums the error gradients from every time step, because the same weights are reused at each step. Feedforward networks, by contrast, use separate weights for each layer and do not process inputs in temporal order. RNNs also support input-output layouts such as one-to-many or many-to-many, which are uncommon in traditional models.

Why RNNs are ideal for sequential data processing

RNNs excel at sequence processing because they dynamically adapt to changes in input over time. They carry context forward in a hidden state and share the same parameters across time steps, ensuring consistent processing of sequences. Their memory capabilities allow them to remember past inputs, which is crucial for understanding patterns in sequential data. For example, in customer service, Sobot's Chatbot uses RNNs to analyze customer queries in real-time, providing accurate and context-aware responses.

Additionally, RNNs handle variable-length inputs, making them versatile for tasks like text generation or time-series analysis. Their ability to process data end-to-end simplifies training, as they can learn from lightly processed sequences (for example, tokenized text) without extensive hand-crafted feature engineering.

Key Features of Recurrent Neural Networks

Memory mechanism and feedback loops

The memory mechanism is a defining feature of RNNs. It enables the network to retain information from earlier inputs and use it to influence future outputs. Feedback loops play a critical role here, allowing the network to pass information from one time step to the next. This mechanism is particularly useful in applications like natural language processing, where understanding the context of a sentence depends on previous words.

For instance, when Sobot's Chatbot interacts with customers, it uses this memory mechanism to maintain the flow of conversation. By remembering past interactions, the chatbot can provide more relevant and personalized responses, enhancing customer satisfaction.

Handling variable-length sequences in customer interactions

RNNs are well-suited for handling variable-length sequences, which is essential in customer service scenarios. Customer queries often vary in length and complexity. RNNs process these inputs dynamically, ensuring that each query receives the appropriate level of attention. This adaptability makes them invaluable for tools like Sobot's Chatbot, which operates across multiple channels and languages.

By leveraging RNNs, the chatbot can analyze and respond to diverse customer inquiries efficiently. Whether it's a short question about product availability or a detailed complaint, the chatbot uses its sequential processing capabilities to deliver accurate and timely responses.
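In practice, variable-length queries are often padded to a common length when they are processed in batches, with a mask that tells the network which positions hold real tokens. The sketch below illustrates that idea in plain Python. The function name `pad_batch` and the token IDs are hypothetical, and this is not a description of how Sobot's Chatbot prepares its inputs.

```python
def pad_batch(sequences, pad_value=0):
    """Pad sequences to a common length and return a mask marking real tokens."""
    max_len = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        padded.append(list(seq) + [pad_value] * n_pad)
        mask.append([1] * len(seq) + [0] * n_pad)  # 1 = real token, 0 = padding
    return padded, mask


# Hypothetical token IDs: a short question and a longer complaint.
queries = [[12, 7], [4, 19, 3, 8, 21]]
padded, mask = pad_batch(queries)
print(padded)
print(mask)
```

The mask lets later steps ignore the padded positions, so a short query is not distorted by the filler values.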

How Do Recurrent Neural Networks Work?

Structure of a Recurrent Neural Network

Input, hidden, and output layers explained

A recurrent neural network (RNN) processes data through three layers (input, hidden, and output), with an activation function applied inside the hidden layer.

- Input Layer: This layer receives the sequence input, such as text or time-series data, and passes it to the hidden layer for processing.

- Hidden Layer: The hidden layer contains interconnected neurons that process the current input and the hidden state from the previous time step. This mechanism enables the network to retain context over time.

- Activation Function: Non-linear activation functions, like tanh or ReLU, allow the network to learn complex patterns in the data.

- Output Layer: The output layer generates predictions or classifications based on the processed information.

These layers work together to handle sequential data effectively. For example, Sobot's Chatbot uses this structure to analyze customer queries and provide accurate responses.
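In the common textbook (Elman-style) formulation, the layers described above can be written as two equations. The symbols are notation introduced here for illustration: $x_t$ is the input at time step $t$, $h_t$ the hidden state, $y_t$ the output, and $W_{xh}$, $W_{hh}$, $W_{hy}$, $b_h$, $b_y$ the learned weights and biases. Using tanh as the activation is one of the options mentioned above, chosen here as an assumption.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \\
y_t &= W_{hy}\, h_t + b_y
\end{aligned}
```

The term $W_{hh}\, h_{t-1}$ is what distinguishes this from a feedforward layer: it feeds the previous hidden state back into the current step.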

Role of recurrent connections in retaining information

Recurrent connections play a crucial role in RNNs by creating loops that allow the hidden state from one time step to influence the next. This looping mechanism acts as a memory, enabling the network to remember past inputs and use them to process current ones. For instance, when analyzing a sentence, the RNN uses recurrent connections to understand the context of each word based on the preceding ones. This capability is vital for tasks like language translation and customer interaction analysis.
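The looping mechanism can be sketched in a few lines of plain Python. This is a minimal illustration with hypothetical toy weights (`W_xh`, `W_hh`, `b_h` are names chosen here), not a production implementation. It runs two sequences that differ only in their first input and shows that the final hidden states still differ, which is the "memory" effect described above.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def rnn_forward(sequence, W_xh, W_hh, b_h):
    """Run the cell over a sequence, reusing the same weights at every step."""
    h = [0.0] * len(b_h)  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Hypothetical toy weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.3]]
b_h = [0.0, 0.1]

# The two sequences differ only in their first element.
seq_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
seq_b = [[0.0, 1.0], [0.0, 1.0], [1.0, 1.0]]
states_a = rnn_forward(seq_a, W_xh, W_hh, b_h)
states_b = rnn_forward(seq_b, W_xh, W_hh, b_h)
print(states_a[-1])
print(states_b[-1])
```

Although both sequences end with the same input, the final hidden states are not equal, because each one still carries a trace of the first input.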

Learning Mechanism of RNNs

Backpropagation Through Time (BPTT) and its significance

Backpropagation Through Time (BPTT) is the primary training method for RNNs. It unrolls the network over the sequence and propagates errors backward through the time steps, so the network can capture temporal dependencies in the data. Because each hidden state depends on the previous one, the loss at a given step contributes gradients not only to that step but also to every earlier step that influenced it. This makes BPTT well suited to sequence models like Sobot's Chatbot, which relies on accurate context understanding.
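To make the backward pass concrete, here is a minimal sketch of BPTT for a scalar RNN, assuming the recurrence h_t = tanh(w * h_{t-1} + u * x_t) and a squared-error loss summed over time steps. The names `w`, `u`, `forward`, `loss`, and `bptt` are chosen for this example, and the data is made up. The result is compared against a finite-difference estimate as a sanity check.

```python
import math


def forward(w, u, xs):
    """Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t), with h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss(w, u, xs, ys):
    """Squared error summed over all time steps."""
    hs = forward(w, u, xs)
    return sum(0.5 * (h - y) ** 2 for h, y in zip(hs[1:], ys))


def bptt(w, u, xs, ys):
    """Gradients of the loss with respect to w and u, computed backward in time."""
    hs = forward(w, u, xs)
    dw = du = 0.0
    dh_next = 0.0  # gradient flowing in from later time steps
    for t in range(len(xs), 0, -1):
        dh = (hs[t] - ys[t - 1]) + dh_next  # local error plus future error
        dz = dh * (1.0 - hs[t] ** 2)        # back through tanh
        dw += dz * hs[t - 1]                # same w is used at every step
        du += dz * xs[t - 1]
        dh_next = dz * w                    # pass gradient to step t - 1
    return dw, du


xs = [0.5, -1.0, 0.8, 0.3]   # made-up inputs
ys = [0.2, -0.4, 0.1, 0.5]   # made-up targets
w, u = 0.7, 0.9
dw, du = bptt(w, u, xs, ys)

eps = 1e-6
dw_numeric = (loss(w + eps, u, xs, ys) - loss(w - eps, u, xs, ys)) / (2 * eps)
print(dw, dw_numeric)
```

The accumulation into `dw` at every step reflects the fact that one shared weight receives error from the whole sequence.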

Challenges like vanishing gradients in training

Training RNNs presents challenges like vanishing and exploding gradients. The vanishing gradient problem occurs when gradients become too small during backpropagation, making it difficult for the network to learn long-term dependencies. Conversely, exploding gradients can cause instability by producing excessively large values. These issues complicate the training process, especially for deep learning tasks involving long sequences.
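A simplified way to see both effects is to ignore the activation function and look at a linear scalar recurrence h_t = w * h_{t-1}. Under that assumption, a gradient passed back through a number of steps is scaled by the absolute value of w raised to that number. The function name `gradient_scale` is hypothetical and the sketch is only meant to show the trend.

```python
def gradient_scale(w, steps):
    """Scaling applied to a gradient after `steps` steps of h_t = w * h_{t-1}."""
    return abs(w) ** steps


for w in (0.5, 1.0, 1.5):
    print(w, gradient_scale(w, 50))
```

With a recurrent weight below 1 the factor collapses toward zero over 50 steps, and with a weight above 1 it grows very large, which mirrors the vanishing and exploding cases.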

Variants of Recurrent Neural Networks

Long Short-Term Memory (LSTM) networks

Long Short-Term Memory networks address the limitations of standard RNNs by introducing a more complex architecture. They use three gates—input, forget, and output—to control the flow of information. This design allows LSTMs to retain relevant data over long sequences while discarding unnecessary information. For instance, LSTMs excel in tasks like speech recognition and customer sentiment analysis, where long-term memory is essential.
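The gating logic can be sketched for a single LSTM unit with a scalar input. This follows the standard LSTM equations, but the parameter layout (a dictionary `p` mapping each gate name to a tuple of input weight, recurrent weight, and bias) and the numeric values are assumptions made for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. `p` maps gate name -> (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much of the new candidate to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate value to store
    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # updated hidden state
    return h, c


# Hypothetical parameters: forget gate wide open, input gate nearly shut.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 10.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7, p=params)
print(h, c)
```

With these settings the cell state stays close to its previous value of 0.7 even though the input is large, which illustrates how the gates let an LSTM hold on to information.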

Gated Recurrent Units (GRUs)

Gated Recurrent Units simplify the LSTM architecture by using only two gates—update and reset. This streamlined design reduces computational complexity while maintaining effective performance. GRUs are particularly useful for real-time applications, such as Sobot's Chatbot, where fast response times are critical. Their efficiency makes them suitable for mobile and edge computing environments.
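For comparison, a single-unit GRU step can be sketched the same way. The parameter layout and values are assumptions for this example. Note that GRU write-ups differ on which side of the blend the update gate multiplies; this sketch uses the convention in which an update gate near 1 keeps the previous state.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a single-unit GRU. `p` maps gate name -> (w_x, w_h, b)."""
    w_x, w_h, b = p["update"]
    z = sigmoid(w_x * x + w_h * h_prev + b)   # update gate
    w_x, w_h, b = p["reset"]
    r = sigmoid(w_x * x + w_h * h_prev + b)   # reset gate
    w_x, w_h, b = p["candidate"]
    h_tilde = math.tanh(w_x * x + w_h * (r * h_prev) + b)  # candidate state
    return (1.0 - z) * h_tilde + z * h_prev   # blend old state and candidate


# Hypothetical parameters: update gate close to 1, so the old state is kept.
params = {
    "update": (0.0, 0.0, 10.0),
    "reset": (0.0, 0.0, 0.0),
    "candidate": (1.0, 1.0, 0.0),
}
h = gru_step(x=3.0, h_prev=0.4, p=params)
print(h)
```

There is no separate cell state here, which is one reason a GRU has fewer parameters than an LSTM of the same size.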

Applications of Recurrent Neural Networks

Enhancing Customer Service with RNNs

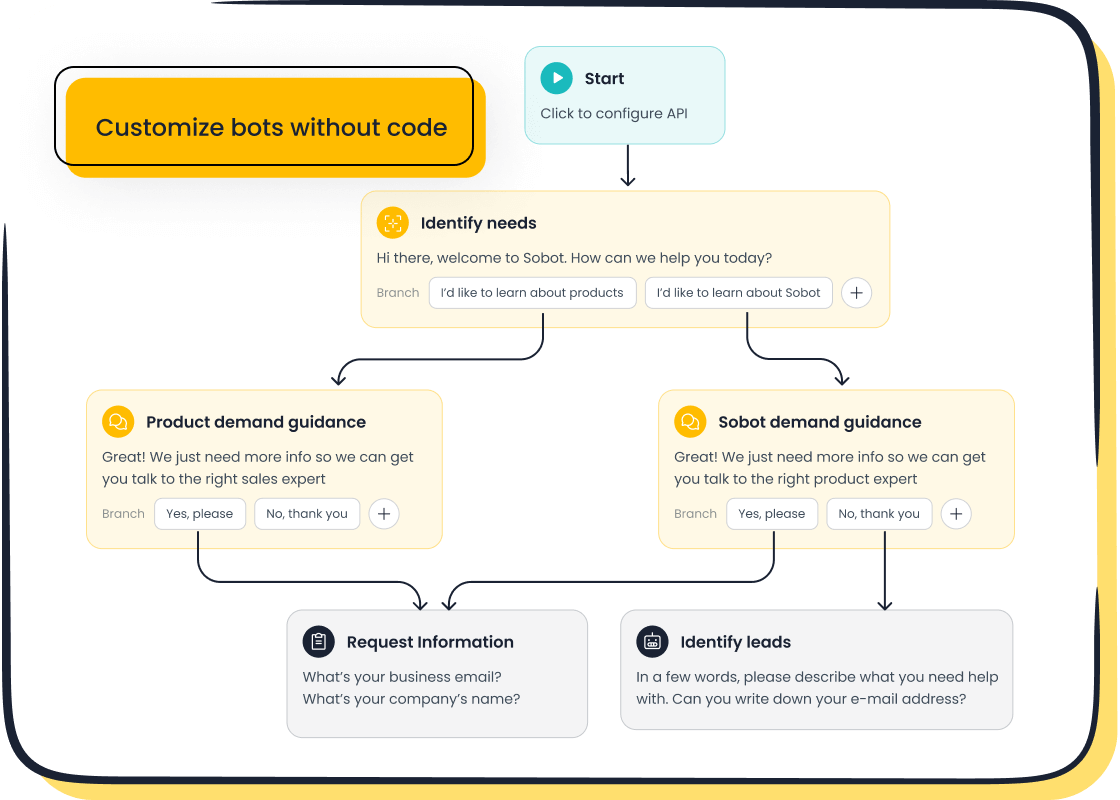

Role of RNNs in chatbots like Sobot's Chatbot

Recurrent neural networks play a pivotal role in modern chatbots, including Sobot's AI Chatbot. By leveraging RNNs, chatbots can process sequential customer queries and provide context-aware responses. For instance, when a customer asks follow-up questions, the chatbot uses its memory mechanism to retain the context of the conversation. This ensures accurate and relevant replies, enhancing the overall user experience. Sobot's Chatbot, powered by RNNs, also supports multilingual interactions, making it an ideal solution for businesses with diverse customer bases.

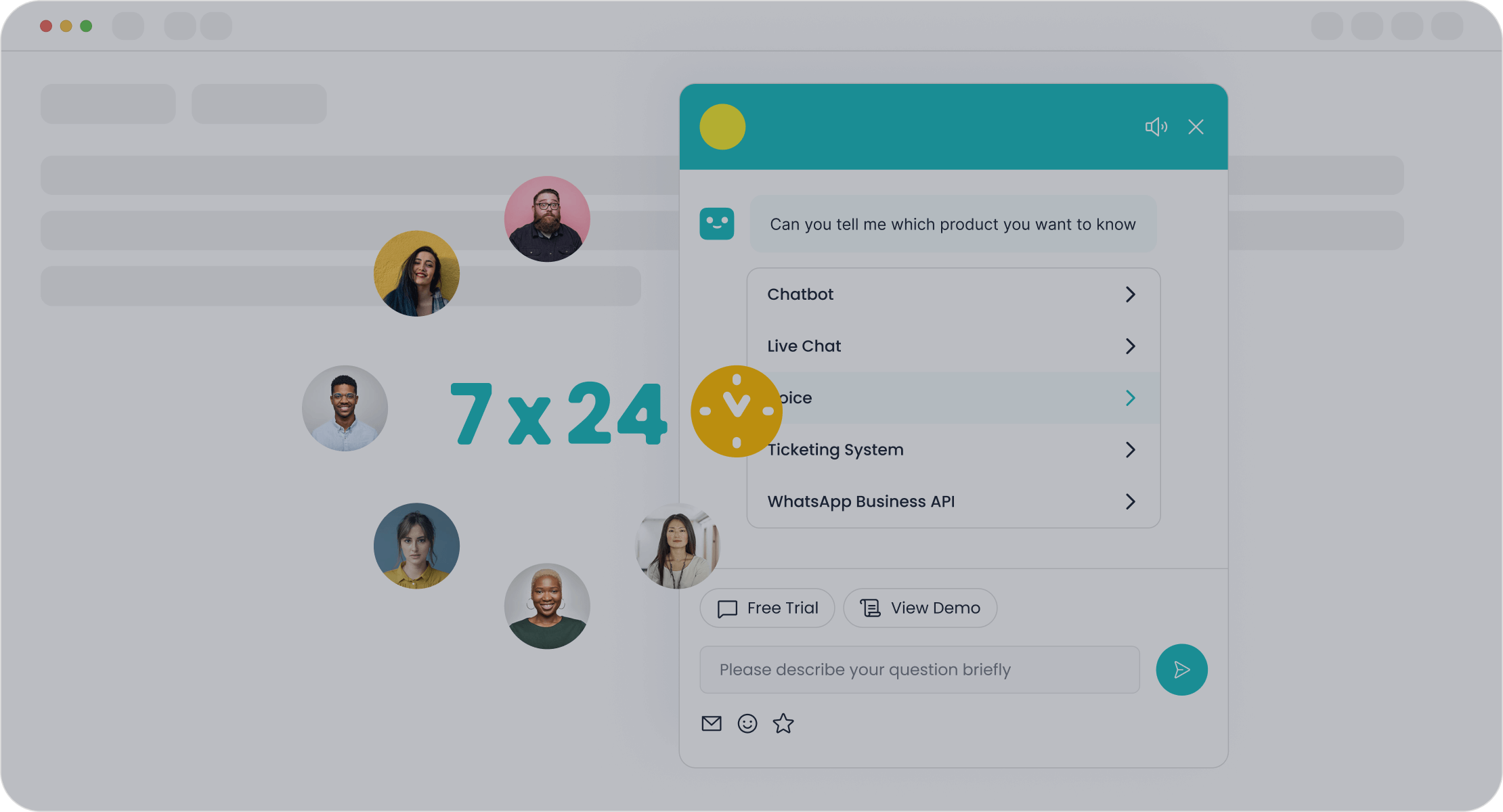

Automating customer interactions and improving efficiency

RNNs automate repetitive customer interactions, significantly improving efficiency. Sobot's Chatbot uses RNNs to handle common queries autonomously, such as order tracking or FAQs. This reduces the workload for human agents, allowing them to focus on complex issues. The chatbot operates 24/7, ensuring customers receive instant support at any time. By automating these tasks, businesses can save costs and improve customer satisfaction.

Natural Language Processing (NLP)

Text generation and sentiment analysis

Recurrent neural networks excel in natural language processing tasks like text generation and sentiment analysis. They process text data one word at a time, capturing sequential dependencies to understand context. For example:

- RNNs predict the next word in a sentence, enabling text generation for applications like chatbots and content creation.

- They analyze sequences of words to determine sentiment in reviews or social media posts, helping businesses gauge customer opinions.

These capabilities make RNNs indispensable for tasks requiring contextual understanding of language.
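Next-word prediction usually ends with a softmax over the vocabulary: the output layer produces one score per word, and the softmax turns those scores into probabilities. The sketch below shows only that final step. The four-word vocabulary and the scores are made up for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["order", "refund", "shipping", "thanks"]
logits = [1.2, 0.3, 2.5, -0.7]  # hypothetical output-layer scores
probs = softmax(logits)
next_word = vocab[probs.index(max(probs))]  # greedy choice
print(next_word)
```

Picking the highest-probability word is the simplest decoding strategy; sampling from `probs` instead gives more varied text.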

Machine translation and conversational AI

RNNs are widely used in machine translation and conversational AI. Because they handle variable-length input sequences, they can translate sentences of different lengths and structures, including complex ones. They also capture context within a sentence, which makes them useful for translating customer inquiries in real-time. The table below highlights their benefits:

| Advantage | Description |
|---|---|
| Contextual Understanding | RNNs capture the context within spoken language for accurate transcription. |
| Variable-Length Input Handling | They process input sequences of varying lengths, accommodating natural variability. |
| Sequential Data Processing | RNNs handle sequential data, making them ideal for language translation tasks. |

These features enable conversational AI systems to deliver coherent and context-aware responses.

Speech Recognition and Audio Processing

Voice-to-text systems for customer support

RNNs enhance voice-to-text systems, which are essential for customer support. They improve transcription accuracy, enabling businesses to analyze customer calls effectively. For example:

- Bidirectional RNNs (BiRNNs), which read a sequence both forward and backward, transcribe and analyze customer interactions, providing insights into satisfaction levels.

- Chatbots with speech recognition engage in voice conversations, improving user experience and problem resolution.

These systems ensure seamless communication between customers and support teams.
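The bidirectional idea mentioned above can be sketched with two scalar RNNs: one reads the sequence left to right, the other right to left, and their states are paired at each position. The function names and weights are hypothetical, and the recurrence h_t = tanh(w * h_{t-1} + u * x_t) is an assumption made for brevity.

```python
import math


def run_rnn(xs, w, u):
    """Scalar RNN pass: h_t = tanh(w * h_{t-1} + u * x_t), starting from 0."""
    h, out = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)
        out.append(h)
    return out


def bidirectional(xs, fwd, bwd):
    """Pair each position's forward state with its backward state."""
    forward_states = run_rnn(xs, *fwd)
    backward_states = run_rnn(xs[::-1], *bwd)[::-1]  # read reversed, then realign
    return list(zip(forward_states, backward_states))


# The only non-zero input is at the end of the sequence.
xs = [0.0, 0.0, 1.0]
result = bidirectional(xs, fwd=(0.5, 1.0), bwd=(0.5, 1.0))
print(result[0])
```

At the first position the forward state is still zero, while the backward state already reflects the later input. One consequence is that the backward pass needs the whole sequence before it can start.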

Audio classification and real-time assistance

Recurrent neural networks also power audio classification and real-time assistance. They adapt to various accents and speech patterns, making them robust for diverse user bases. For instance:

- RNNs detect emotions by analyzing tone and pitch, helping customer service teams tailor their responses.

- They classify audio data, enabling real-time assistance in applications like virtual assistants and call routing.

These advancements improve the efficiency and personalization of customer interactions.

Time-Series Analysis

Predicting customer behavior and trends

Recurrent neural networks excel in time-series analysis, making them a powerful tool for predicting customer behavior and trends. These networks process sequences of data, such as purchase histories or browsing patterns, to identify recurring behaviors. By analyzing this information, businesses can anticipate customer needs and tailor their strategies accordingly.

For example, Sobot's AI Chatbot uses RNNs to analyze customer interactions across various channels. It identifies patterns in queries, helping businesses predict future customer concerns. This predictive capability allows you to proactively address issues, improving satisfaction and loyalty. Additionally, RNNs help businesses optimize marketing campaigns by forecasting customer responses to promotions or new products. These insights enable you to allocate resources effectively and maximize returns.

Applications in stock price forecasting and anomaly detection

RNNs are also applied to stock price forecasting and anomaly detection. By analyzing historical financial data, these networks produce forecasts of future stock prices. For instance, a Gated Recurrent Unit (GRU) model has been used to forecast Starbucks stock prices. Metrics like mean squared error (MSE) and mean absolute error (MAE) quantify how far the forecasts fall from the actual prices, which makes it possible to judge whether a model is reliable enough to use.
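Both metrics are straightforward to compute. The sketch below uses made-up numbers, not real stock prices or results from any actual model.

```python
def mse(actual, predicted):
    """Mean squared error: penalizes large misses more heavily."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean absolute error: average size of the miss, in the data's own units."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Made-up values for illustration only.
actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]
print(mse(actual, predicted), mae(actual, predicted))
```

Because MSE squares each error, a single large miss raises it much more than it raises MAE, so reporting both gives a fuller picture.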

In anomaly detection, RNNs learn normal patterns in data streams and identify deviations that may signal issues. This application is crucial in industries like finance and cybersecurity. For example, RNNs can detect unusual transactions in real-time, helping you prevent fraud. Similarly, they monitor system logs to identify potential threats, ensuring operational stability.
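One common way to turn a sequence model into an anomaly detector is to compare its predictions with what actually happened and flag points where the error is unusually large. The sketch below assumes the predictions already exist (for example, from an RNN) and only shows the thresholding step. The function names, the choice of three standard deviations, and all numbers are assumptions for illustration.

```python
import statistics


def fit_threshold(normal_errors, k=3.0):
    """Error level considered unusual, based on errors seen on normal data."""
    mean = statistics.mean(normal_errors)
    std = statistics.pstdev(normal_errors)
    return mean + k * std


def flag_anomalies(actual, predicted, threshold):
    """Indices where the prediction error exceeds the threshold."""
    return [
        i for i, (a, p) in enumerate(zip(actual, predicted))
        if abs(a - p) > threshold
    ]


# Made-up prediction errors observed on known-normal transactions.
normal_errors = [0.5, 0.8, 0.6, 0.7, 0.4]
threshold = fit_threshold(normal_errors)

# Made-up new data: the third value is far from what the model expected.
actual = [20.0, 21.0, 95.0, 19.5]
predicted = [20.4, 20.5, 21.0, 20.0]
anomalies = flag_anomalies(actual, predicted, threshold)
print(anomalies)
```

Calibrating the threshold on normal data keeps a single extreme value from inflating the threshold that is supposed to catch it.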

These use cases highlight the versatility of recurrent neural networks in handling complex sequences. Whether you're predicting customer trends or safeguarding your business, RNNs offer robust solutions for time-sensitive challenges.

Challenges of Recurrent Neural Networks

Common Issues in RNNs

Vanishing and exploding gradients

Recurrent neural networks often face challenges during training due to vanishing and exploding gradients. These problems arise when gradients, which guide the adjustment of weights, become either too small or excessively large.

- Vanishing Gradient Problem: Gradients shrink exponentially as they propagate backward through time steps. This makes it difficult for the network to learn from earlier inputs, especially in long sequences. For example, when processing customer queries spanning multiple interactions, the network may fail to retain crucial context.

- Exploding Gradient Problem: Gradients grow exponentially, leading to instability in training. This can cause the network to produce unreliable predictions or fail to converge altogether.

Both issues hinder the learning process, particularly for tasks requiring long-term dependencies, such as speech recognition or sequential data analysis.
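The exponential behavior follows from the chain rule. Assuming a tanh RNN with hidden state $h_i = \tanh(W_{hh} h_{i-1} + W_{xh} x_i + b_h)$ (notation introduced here for illustration), the sensitivity of the state at step $t$ to an earlier state at step $k$ is a product of one Jacobian per intermediate step, with factors ordered from $i = t$ down to $i = k+1$:

```latex
\frac{\partial h_t}{\partial h_k}
  = \prod_{i=k+1}^{t} \frac{\partial h_i}{\partial h_{i-1}}
  = \prod_{i=k+1}^{t} \operatorname{diag}\left(1 - h_i^{2}\right) W_{hh}
```

Since the tanh derivative $1 - h_i^2$ is at most 1, the norm of this product is bounded by $\lVert W_{hh} \rVert^{\,t-k}$. When that norm is below 1 the product shrinks exponentially with the distance $t - k$ (vanishing gradients). When it is above 1 the product can grow exponentially (exploding gradients).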

Difficulty in capturing long-term dependencies

Standard RNNs struggle to capture long-term dependencies due to the vanishing gradient problem. As sequences lengthen, the gradient signal reaching earlier time steps becomes too small to change the weights meaningfully. This limits the network's capacity to learn from distant past information. For instance, in machine translation, understanding the context of a sentence often requires retaining information from earlier words. Without effective memory mechanisms, the network's performance on such tasks deteriorates.

Solutions to RNN Challenges

Introduction of LSTMs and GRUs

Long short-term memory networks (LSTMs) and gated recurrent units (GRUs) were developed to address these challenges. Both architectures introduce mechanisms that manage long-term dependencies effectively.

- LSTMs: These networks use gates to control the flow of information. The forget gate discards irrelevant data, while the input and output gates decide what to store and retrieve from the cell state. This design allows LSTMs to retain important context over long sequences, making them ideal for tasks like text generation and customer sentiment analysis.

- GRUs: GRUs simplify the LSTM architecture by using fewer gates. They rely on two gates, update and reset, and merge the LSTM's separate cell state into the hidden state. GRUs are computationally lighter, making them suitable for real-time applications like Sobot's Chatbot, which processes multilingual customer queries seamlessly.

Use of attention mechanisms in modern architectures

Attention mechanisms further enhance the capabilities of RNNs by allowing networks to focus on relevant parts of the input data. These mechanisms assign different weights to each word or element in a sequence, improving contextual understanding.

- Models with attention mechanisms excel in tasks like language translation, where understanding specific words in a sentence is crucial.

- Attention mechanisms also improve performance on long sequences by enabling the network to prioritize important information, reducing reliance on distant memory.

For example, in customer service, attention mechanisms help chatbots like Sobot's AI Chatbot deliver accurate responses by focusing on key aspects of customer queries. This ensures better predictions and enhances user satisfaction.
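The weighting step can be illustrated with dot-product attention, one common variant. Each hidden state is scored by its similarity to a query vector, the scores are normalized with a softmax, and the result is a weighted average of the states. The vectors below are made up, and the function name `attention` is chosen for this example.

```python
import math


def attention(query, states):
    """Dot-product attention over a list of hidden-state vectors."""
    scores = [sum(q * s for q, s in zip(query, state)) for state in states]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(states[0])
    # Context vector: weighted average of the hidden states.
    context = [
        sum(w * state[d] for w, state in zip(weights, states))
        for d in range(dim)
    ]
    return weights, context


# Made-up hidden states for a three-word query, and a made-up query vector.
states = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query = [1.0, 0.0]
weights, context = attention(query, states)
print(weights)
print(context)
```

The first and third states point in nearly the same direction as the query, so they receive most of the weight, while the second contributes the least.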

The Role of RNNs in Sobot's Chatbot

Leveraging RNNs for Customer Interaction

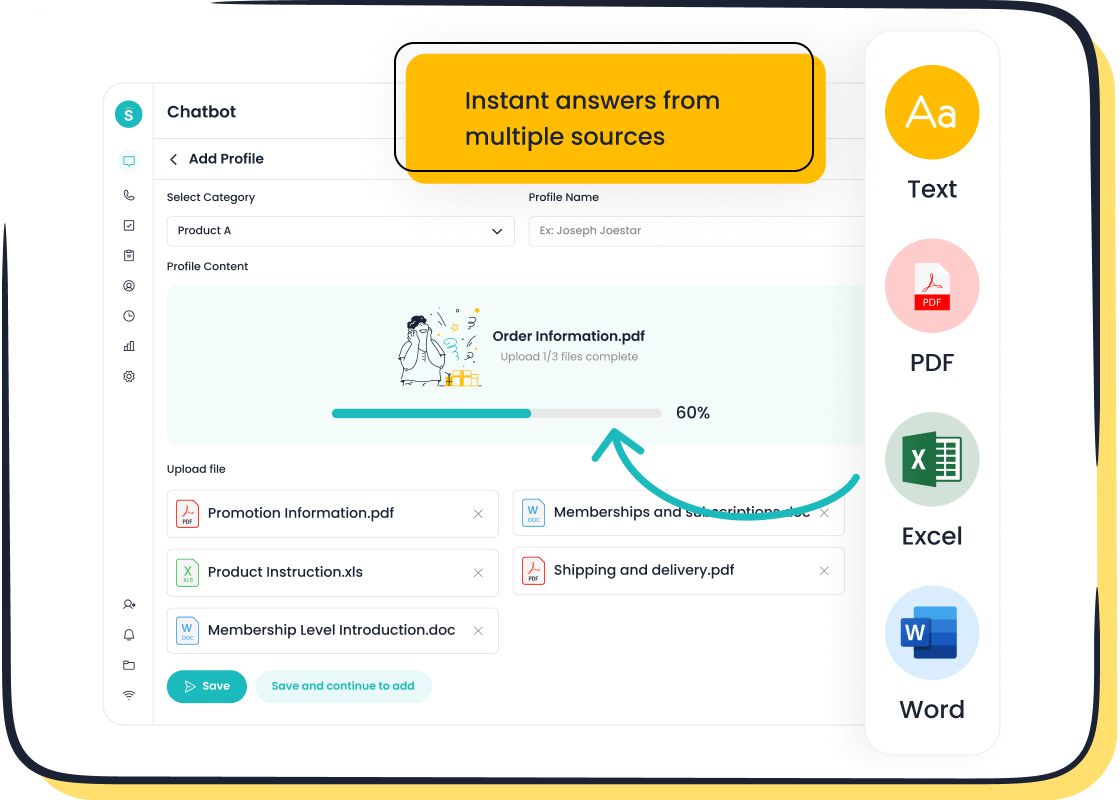

How Sobot's Chatbot uses RNNs for multilingual support

Sobot's Chatbot uses recurrent neural networks to deliver seamless multilingual support. These networks process sequential data, enabling the chatbot to understand and respond in multiple languages. By retaining the context of conversations through hidden states, the chatbot ensures accurate translations and context-aware replies. This feature is especially valuable for businesses with global customers. For instance, when a customer switches between languages mid-conversation, the chatbot adapts without losing context. This adaptability enhances communication and ensures a smooth user experience.

The chatbot’s multilingual capabilities also reduce the need for human agents to handle language-specific queries. This not only saves time but also improves efficiency. Whether your business operates in retail, finance, or gaming, Sobot's Chatbot ensures that language barriers never hinder customer interactions.

Enhancing customer satisfaction through real-time responses

Real-time responses are critical for customer satisfaction, and Sobot's Chatbot excels in this area. By leveraging bidirectional RNNs, the chatbot predicts and processes customer queries accurately and continuously. This allows it to engage in voice conversations, improving problem resolution and overall satisfaction. For example, when a customer asks a follow-up question, the chatbot uses its memory mechanism to provide a relevant and immediate reply.

This capability ensures that customers receive instant support, even during peak hours. The chatbot operates 24/7, offering uninterrupted assistance. Whether it’s answering FAQs or resolving complex issues, the chatbot’s real-time responses create a positive impression and build trust with your customers.

Benefits of RNNs in Contact Center Solutions

Automating repetitive queries to reduce agent workload

Recurrent neural networks enable Sobot's Chatbot to automate repetitive queries effectively. Tasks like order tracking, appointment scheduling, and FAQs are handled autonomously. This reduces the workload for human agents, allowing them to focus on more complex customer needs. For example, OPPO, a leading smart device company, achieved an 83% chatbot resolution rate by using Sobot's solutions. This automation not only improves efficiency but also ensures consistent service quality.

By automating these tasks, your business can scale customer support without increasing costs. The chatbot’s ability to handle high volumes of queries ensures that no customer is left waiting, even during busy periods.

Improving efficiency and cutting costs for businesses

Sobot's Chatbot improves operational efficiency while significantly reducing costs. By operating 24/7, the chatbot eliminates the need for additional agents during off-hours. It also reduces expenses by triaging queries before escalating them to human agents. Businesses using Sobot's Chatbot have reported up to 50% savings on customer support costs.

The chatbot’s learning capabilities ensure continuous improvement. As it processes more interactions, it becomes better at understanding customer needs. This not only enhances efficiency but also boosts customer satisfaction. For businesses aiming to optimize resources, Sobot's Chatbot offers a cost-effective and impactful solution.

Recurrent neural networks (RNNs) have changed the way sequential data is processed, offering strong capabilities for understanding patterns over time. Their versatility shines in applications like natural language processing and time-series analysis:

- In NLP, RNNs predict the next word in a sentence and analyze sentiment in text.

- For time-series data, they forecast trends in financial markets and predict weather conditions using historical data.

Sobot's Chatbot exemplifies the practical benefits of RNNs. Bidirectional RNNs enhance voice recognition, enabling the chatbot to understand customer queries with precision. These networks also improve natural language understanding, allowing the chatbot to engage in meaningful conversations. Additionally, voice-based support powered by RNNs transcribes and analyzes customer calls, providing actionable insights to improve satisfaction.

By leveraging RNNs, you can transform customer interactions, streamline operations, and achieve greater efficiency across industries.

FAQ

1. What makes recurrent neural networks (RNNs) unique?

RNNs process sequential data by retaining information from previous inputs. This memory mechanism allows them to understand context, making them ideal for tasks like language translation and customer service. For example, Sobot's Chatbot uses RNNs to provide accurate, context-aware responses in real-time.

2. How do RNNs improve customer service?

RNNs automate repetitive tasks like answering FAQs and tracking orders. Sobot's Chatbot, powered by RNNs, operates 24/7 and supports multiple languages. This reduces agent workload and ensures customers receive instant, accurate assistance, even during peak hours.

3. Can RNNs handle multilingual interactions?

Yes, RNNs excel at processing multilingual data. Sobot's Chatbot uses this capability to deliver seamless support in various languages. It adapts to customer preferences, ensuring smooth communication and enhancing satisfaction across global markets.

4. What challenges do RNNs face in training?

RNNs often encounter vanishing or exploding gradients during training. These issues make it hard to learn long-term dependencies. Advanced architectures like LSTMs and GRUs, used in Sobot's Chatbot, mitigate these problems by managing memory more effectively.

5. How does Sobot's Chatbot benefit businesses?

Sobot's Chatbot improves efficiency by automating customer interactions. It saves up to 50% on support costs and boosts productivity by 70%. Its multilingual and 24/7 capabilities make it a valuable tool for businesses aiming to enhance customer satisfaction.
