How Long Short-Term Memory Works in Neural Networks

Long short-term memory (LSTM) networks are a powerful type of recurrent neural network (RNN) designed to handle sequential data. Unlike traditional RNNs, LSTMs excel at managing long-term dependencies, making them essential for tasks like language modeling and time series prediction. For example, LSTMs play a key role in machine translation and text summarization by maintaining context over extended sequences. They also shine in stock market predictions, where associating past trends with current data is critical.

Despite the rise of Transformer-based models, innovations like xLSTM highlight the ongoing importance of LSTM networks in deep learning. Companies like Sobot leverage these advancements to enhance AI-driven tools, ensuring efficient and accurate customer interactions. By addressing the vanishing gradient problem, long short-term memory networks continue to transform how you process and analyze sequential data.

What is Long Short-Term Memory (LSTM)?

Definition and Purpose of LSTM

What does "Long Short-Term Memory" mean?

Long short-term memory refers to a specialized type of recurrent neural network (RNN) designed to retain information over extended sequences. Unlike traditional RNNs, which struggle to maintain context over long periods, LSTMs excel at remembering both recent and distant data. This capability makes them ideal for tasks like language translation, where understanding earlier words in a sentence is crucial for accurate interpretation. By using a unique memory structure, LSTMs allow you to process sequential data more effectively, ensuring that important information is neither lost nor overwritten.

How LSTMs fit into the family of RNNs

LSTMs belong to the broader family of recurrent neural networks, but they stand out due to their advanced architecture. While standard RNNs process sequential data, they often face challenges like the vanishing gradient problem, which limits their ability to learn from long-term dependencies. LSTMs overcome this by incorporating gates—input, forget, and output—that control the flow of information. These gates enable LSTMs to decide what to remember, what to forget, and when to output information. This makes LSTMs more sophisticated and capable of handling complex tasks like machine translation and speech recognition.

| Feature | RNN | LSTM |
|---|---|---|
| Memory retention | Poor over long sequences | Strong, via the cell state |
| Gradient issues | Vanishing/exploding gradients | Vanishing gradients mitigated by gating; exploding gradients are usually handled with gradient clipping |
| Suitability for long sequences | Limited | Highly suitable |

Why LSTMs Are Essential for Sequential Data

Challenges with traditional RNNs

Traditional recurrent neural networks face significant challenges when processing sequential data. One major issue is the vanishing gradient problem, where gradients shrink during backpropagation, making it difficult for the network to learn long-term dependencies. This limitation affects tasks like financial forecasting, where understanding trends over time is critical. Additionally, RNNs lack mechanisms to selectively retain or discard information, leading to poor performance on complex tasks requiring memory over extended sequences.
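To make the vanishing gradient concrete, the sketch below tracks the gradient of the last hidden state with respect to the first in a one-unit vanilla RNN, h_t = tanh(w · h_{t-1} + x). The weight w = 0.9, the zero input, and the starting state are arbitrary illustrative choices, not values from any real model. Each backpropagation step multiplies the gradient by w · (1 − h_t²), which here is always below 0.9, so the product shrinks geometrically with sequence length.

```python
import math

def rnn_gradient_norm(w: float, steps: int, h0: float = 0.5, x: float = 0.0) -> float:
    """|dh_T/dh_0| for a one-unit RNN h_t = tanh(w * h_{t-1} + x), after `steps` steps."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        # Chain rule: dh_t/dh_{t-1} = w * (1 - h_t**2), multiplied into the running product
        grad *= w * (1.0 - h * h)
    return abs(grad)

for T in (1, 10, 50):
    print(f"T={T:>2}  |dh_T/dh_0| = {rnn_gradient_norm(w=0.9, steps=T):.2e}")
```

If the per-step factor were larger than 1 instead, the same product would grow with every step, which is the exploding-gradient case.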

How LSTMs solve the vanishing gradient problem

LSTMs address these challenges with a gated memory mechanism. Three gates (input, forget, and output) manage the flow of information: the input gate determines what new information to store, the forget gate decides what to discard, and the output gate controls what to pass to the next step. Crucially, the cell state is updated additively: the old state is scaled by the forget gate and new content is added to it, rather than being pushed through a squashing nonlinearity at every step. As long as the forget gate stays open, gradients can flow back through many time steps without shrinking toward zero, which is what lets LSTMs preserve long-term dependencies. For example, in predictive text systems, LSTMs draw on earlier parts of a conversation to suggest relevant next words.
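The gating argument can be written out with the textbook update equations (the names W for input weights and U for recurrent weights are notation chosen here). In a vanilla RNN, the gradient between distant hidden states is an ordered product of Jacobians, with later time steps on the left; along the LSTM's direct cell-state path, it is a product of forget-gate values only. This considers just the direct path through c_{t-1} and ignores the indirect paths through the gates, which also depend on h_{t-1}.

```latex
\begin{aligned}
&\text{Vanilla RNN: } h_t = \tanh\left(W x_t + U h_{t-1} + b\right),
\qquad \frac{\partial h_T}{\partial h_k} = \prod_{t=k+1}^{T} \operatorname{diag}\left(1 - h_t \odot h_t\right) U \\
&\text{LSTM: } c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,
\qquad \left.\frac{\partial c_T}{\partial c_k}\right|_{\text{direct}} = \prod_{t=k+1}^{T} \operatorname{diag}\left(f_t\right)
\end{aligned}
```

In the RNN product, each tanh-derivative factor is at most 1, so when U's largest singular value is below 1 the product shrinks exponentially in T − k. In the LSTM, the direct-path factors are just forget-gate values; if they stay close to 1, the product stays close to 1 and the error signal survives many steps.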

LSTMs have transformed sequential data processing by overcoming the limitations of traditional RNNs. Their advanced architecture ensures better retention of critical information, making them indispensable for modern AI applications.

Understanding LSTM Architecture

Key Components of LSTM Architecture

The cell state: The "memory" of the LSTM

The cell state is the backbone of the long short-term memory (LSTM) network. It acts as the "memory" that carries information across time steps, enabling the network to retain long-term dependencies. Unlike traditional recurrent neural networks (RNNs), which rely solely on hidden states, LSTMs use the cell state to help preserve critical information as data flows through the network. This feature makes LSTMs highly effective for tasks like language modeling and time series prediction, where remembering earlier inputs is essential. For example, in customer service chatbots like Sobot's AI Chatbot, the cell state helps retain the context of a conversation, ensuring accurate and relevant responses.

The gates: Input, forget, and output gates

LSTM architecture includes three gates that regulate the flow of information:

- Forget Gate: Decides which parts of the cell state to discard.

- Input Gate: Determines what new information to add to the cell state.

- Output Gate: Controls what information to output as the hidden state.

These gates work together to manage the balance between retaining and forgetting information. For instance, in a multilingual chatbot, the forget gate might discard irrelevant language data, while the input gate updates the memory with new user queries.
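For reference, the gates listed above are usually written as follows. This is the common formulation without peephole connections; σ is the logistic sigmoid, ⊙ is element-wise multiplication, W and U are input and recurrent weight matrices, and b are bias vectors (notation chosen here, not taken from a specific library).

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{input gate} \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{output gate} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell-state update} \\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{hidden state}
\end{aligned}
```

The three gates use the sigmoid, so each entry lies between 0 and 1 and acts as a soft on/off switch; the candidate values use tanh, so they lie between -1 and 1.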

How LSTMs Process Data

Step-by-step flow of data through an LSTM

Processing data through an LSTM involves several steps:

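- Input: The network receives a vector representing the current observation, together with the previous hidden state and cell state.

- Forget Gate: Decides which parts of the previous cell state to keep and which to discard.

- Input Gate: Decides which new candidate values, computed from the current input and the previous hidden state, to write into the cell state.

- Cell State Update: Combines the filtered old state with the gated new candidate values.

- Output Gate: Decides which parts of the updated cell state become the hidden state, which is used at the next time step or as the final output.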

This structured flow allows LSTMs to handle complex sequential data effectively. For example, Sobot's AI Chatbot uses this process to analyze customer queries and provide accurate, real-time responses.
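The five steps above can be sketched as a single time step in plain Python. This is a minimal illustration under stated assumptions: the helper names (`lstm_step`, `random_params`) are hypothetical, the weights are random and untrained, and lists stand in for the tensors a real framework would use. It follows the common formulation without peephole connections and does not describe how any particular product, including Sobot's chatbot, is implemented.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step (no peephole connections).

    params maps each of "f", "i", "o", "g" to (W, U, b): W multiplies the input x,
    U multiplies the previous hidden state, and b is the bias vector.
    """
    def pre_activation(gate):
        W, U, b = params[gate]
        return [wx + uh + bias for wx, uh, bias in zip(matvec(W, x), matvec(U, h_prev), b)]

    f = [sigmoid(z) for z in pre_activation("f")]    # forget gate: how much old memory to keep
    i = [sigmoid(z) for z in pre_activation("i")]    # input gate: how much new content to write
    g = [math.tanh(z) for z in pre_activation("g")]  # candidate values to write
    o = [sigmoid(z) for z in pre_activation("o")]    # output gate: how much memory to expose
    # Cell-state update: scaled old memory plus gated new content
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # New hidden state: gated, squashed view of the cell state
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def random_params(input_size, hidden_size, seed=0):
    """Random, untrained weights for illustration only (hypothetical helper)."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
            for gate in ("f", "i", "o", "g")}

params = random_params(input_size=3, hidden_size=2)
h, c = [0.0, 0.0], [0.0, 0.0]            # empty memory before the first input
sequence = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5], [0.5, 0.5, 0.0]]
for x in sequence:                       # the same weights are reused at every step
    h, c = lstm_step(x, h, c, params)
print("hidden state:", h)
print("cell state:  ", c)
```

In practice, a library layer such as PyTorch's `torch.nn.LSTM` or Keras's `LSTM` runs this loop, batched and vectorized, with trained weights; the sketch only makes each step visible.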

How the cell state is updated

The cell state updates through a combination of the forget and input gates. The forget gate removes outdated information, while the input gate adds new data. This mechanism ensures that the cell state retains only the most relevant long-term memory. For instance, in predictive text systems, this process helps the model suggest words based on the context of the entire sentence.
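A worked example with made-up scalar gate values shows the two extremes of the update c_t = f_t c_{t-1} + i_t c̃_t, starting from an old cell state c_{t-1} = 2.0 and a candidate value c̃_t = 0.5:

```latex
\begin{aligned}
\text{Keep: } & f_t = 0.9,\ i_t = 0.2: && c_t = 0.9 \cdot 2.0 + 0.2 \cdot 0.5 = 1.8 + 0.1 = 1.9 \\
\text{Overwrite: } & f_t = 0.1,\ i_t = 0.9: && c_t = 0.1 \cdot 2.0 + 0.9 \cdot 0.5 = 0.2 + 0.45 = 0.65
\end{aligned}
```

With a mostly open forget gate and a mostly closed input gate, the old memory survives almost intact; reversing the gates replaces most of it with the new candidate value.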

Unique Features of LSTMs

Retaining and forgetting information

LSTMs excel at balancing retention and forgetting. The gating mechanisms allow the network to selectively keep or discard information, addressing the limitations of traditional recurrent neural networks. This capability is crucial for applications like sentiment analysis, where understanding the tone of a conversation requires both short-term and long-term memory.

Handling long-term and short-term dependencies

The dual memory system of LSTMs (the cell state for long-term memory and the hidden state for short-term memory) makes them well suited for sequential data. Unlike standard RNNs, LSTMs can learn long-term dependencies because their gating greatly reduces the vanishing gradient problem. This feature is vital for tools like Sobot's AI Chatbot, which must understand both the immediate context and the overall conversation history to deliver meaningful interactions.

Why Use LSTMs in Customer Interaction Solutions?

Advantages of LSTMs for Customer Service

Better handling of long sequences in customer queries

Long short-term memory (LSTM) networks excel at processing sequential data, making them ideal for customer service scenarios. When customers submit lengthy queries or describe complex issues, LSTMs analyze the entire sequence without losing context. This capability ensures that your AI-powered tools, like chatbots, can understand and respond accurately. For example, Sobot's AI Chatbot uses LSTMs to retain the context of multilingual conversations, ensuring that even long exchanges remain coherent and relevant. By handling long sequences effectively, LSTMs improve the quality of customer interactions and reduce the need for human intervention.

Improved accuracy in understanding customer intent

LSTMs enhance your ability to predict customer intent by modeling sequential dependencies in interaction data. They identify patterns in past behaviors, such as recurring purchases or service requests, to anticipate future needs. This precision is crucial for delivering personalized experiences. For instance, an LSTM-powered chatbot can detect when a customer is frustrated and escalate the issue to a human agent. By filtering out irrelevant information and focusing on meaningful data, LSTMs ensure that your customer service tools provide accurate and timely responses.
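One common way to turn an LSTM into an intent classifier is to feed its final hidden state into a linear layer followed by a softmax. The sketch assumes that hidden state has already been computed by an LSTM encoder; the intent labels, weights, hidden state, and the escalation threshold of 0.6 are hypothetical, and the escalation rule only illustrates the frustrated-customer example above rather than Sobot's actual logic.

```python
import math

# Hypothetical label set; "complaint" stands in for the frustrated-customer case.
INTENTS = ["order_status", "refund_request", "complaint"]

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_intent(h_final, W, b, escalate_threshold=0.6):
    """Map an LSTM's final hidden state to an intent label and an escalation flag.

    W has one row per intent (each row as long as h_final); b has one bias per intent.
    """
    logits = [sum(w * h for w, h in zip(row, h_final)) + bias for row, bias in zip(W, b)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda k: probs[k])
    escalate = INTENTS[best] == "complaint" and probs[best] >= escalate_threshold
    return INTENTS[best], probs, escalate

# Made-up hidden state and weights, for illustration only
h_final = [1.0, 1.0]
W = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
b = [0.0, 0.0, 0.0]
label, probs, escalate = classify_intent(h_final, W, b)
print(label, [round(p, 3) for p in probs], escalate)
```

Requiring a minimum confidence before escalating is a design choice: it keeps borderline predictions from flooding human agents, at the cost of occasionally missing a frustrated customer.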

Applications in Contact Centers

Enhancing AI-powered tools like the Sobot Chatbot

LSTMs bring several advantages to AI-powered tools in contact centers.

- They enable intelligent ticket routing by analyzing customer interaction history to predict urgency and classify requests.

- They enhance sentiment analysis, helping you identify trends and proactively address customer concerns.

- They forecast support workload, allowing your team to allocate resources effectively during peak times.

Sobot's AI Chatbot leverages these capabilities to deliver seamless customer experiences. Its LSTM-driven architecture ensures accurate intent recognition and efficient query resolution, boosting both productivity and customer satisfaction.

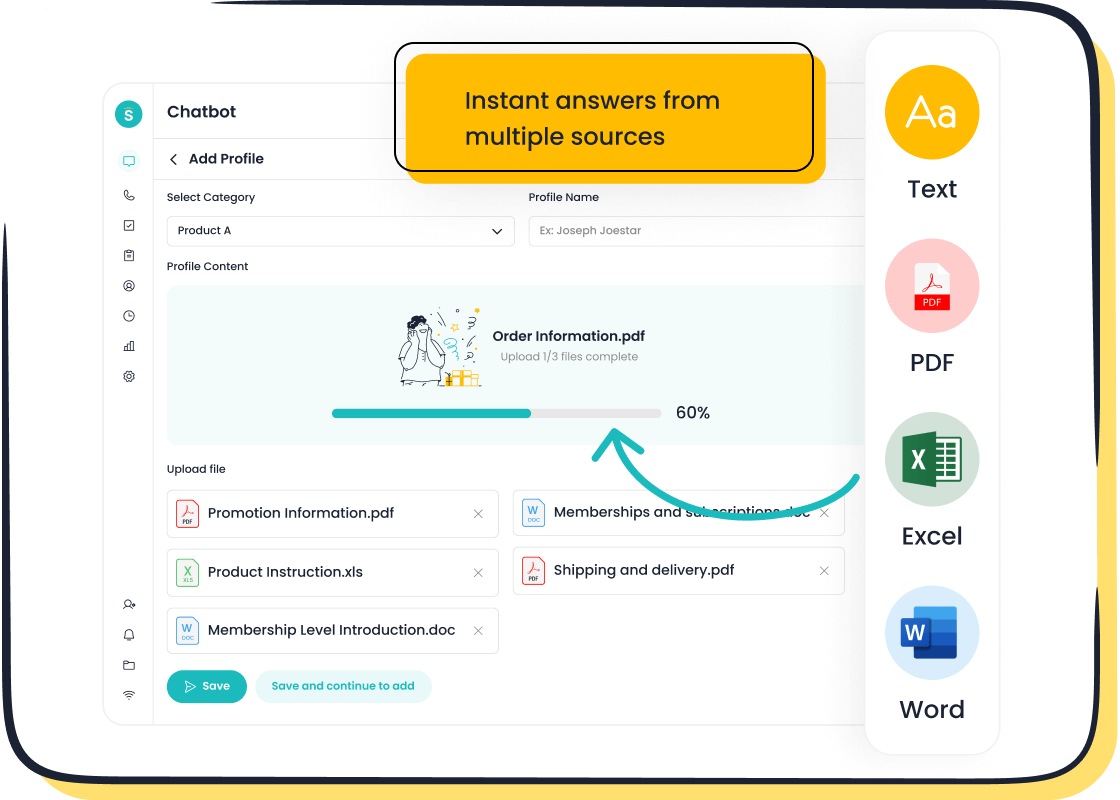

Automating customer interactions with sequential data

LSTMs automate repetitive tasks by analyzing sequential data, such as chat logs or email threads. This automation streamlines processes like ticket prioritization and knowledge base updates. For example, LSTMs can identify frequently asked questions and automatically generate support documentation. In Sobot's contact center solutions, this functionality reduces agent workload and ensures faster response times, allowing your team to focus on more complex issues.

Real-World Examples

Sentiment analysis for customer feedback

LSTMs play a vital role in sentiment analysis by capturing the nuances of human emotions in text. For instance, an e-commerce company might use LSTMs to analyze customer reviews and prioritize negative feedback for immediate action. Similarly, Sobot's AI Chatbot can assess sentiment in real-time conversations, enabling proactive support and improving customer satisfaction.

Predicting customer behavior in retail and finance

LSTMs help businesses predict customer behavior by analyzing historical data. In retail, they enable personalized recommendations and targeted marketing campaigns. For example, an LSTM model might suggest products based on a customer’s previous purchases. In finance, LSTMs anticipate recurring transactions, such as monthly bill payments, to offer tailored financial advice. These applications enhance customer engagement and drive business growth.

LSTM in Action: Sobot Chatbot and Beyond

How Sobot Chatbot Leverages LSTM

Processing multilingual customer queries

The Sobot AI Chatbot uses long short-term memory (LSTM) networks to process multilingual customer queries with remarkable accuracy. LSTMs excel at retaining context across long sequences, making them ideal for handling complex, multi-language interactions. For example, when a customer switches between languages mid-conversation, the chatbot uses LSTM to maintain the flow and provide accurate responses. This capability ensures seamless communication, regardless of the language used. By leveraging LSTM, Sobot's chatbot delivers a personalized experience, enhancing customer satisfaction and reducing the need for human intervention.

Enhancing real-time intent recognition

LSTM networks enable Sobot's chatbot to recognize customer intent in real time. By analyzing sequential data, such as the order of words in a query, LSTM identifies patterns that reveal the customer's needs. For instance, if a user expresses frustration, the chatbot can escalate the issue to a human agent immediately. This real-time intent recognition improves response accuracy and ensures timely resolutions, making your customer interactions more efficient and effective.

Benefits of LSTM in Sobot's Contact Center Solutions

Boosting efficiency and reducing costs

LSTM networks optimize resource allocation in contact centers. They predict support demand, allowing you to schedule staff strategically during peak periods. This reduces response times and enhances customer satisfaction. Additionally, avoiding overstaffing during low-demand periods lowers operational costs.

| Benefit | Description |
|---|---|
| Resource Optimization | Predicting support demand allows for strategic staffing and scheduling during peak periods. |
| Improved Customer Satisfaction | Better preparation for busy times reduces response times, leading to higher customer satisfaction. |
| Cost Savings | Avoiding overstaffing during low-demand periods reduces operational costs. |
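Turning a demand forecast into a staffing plan can be as simple as a workload calculation. The sketch below is a hypothetical rule of thumb that sits downstream of the model rather than being part of it: it assumes a forecast ticket count per interval (for example, from an LSTM forecaster), an average handling time, and a target occupancy, and it ignores queueing effects, which models such as Erlang C account for.

```python
import math

def agents_needed(forecast_tickets: float, avg_handle_minutes: float,
                  interval_minutes: float = 60.0, target_occupancy: float = 0.85) -> int:
    """Agents required so the forecast workload fits in one interval at the target occupancy."""
    if not 0 < target_occupancy <= 1:
        raise ValueError("target_occupancy must be in (0, 1]")
    workload = forecast_tickets * avg_handle_minutes          # agent-minutes of work
    capacity_per_agent = interval_minutes * target_occupancy  # productive minutes per agent
    return math.ceil(workload / capacity_per_agent)

# Hypothetical hourly forecasts for one afternoon
hourly_forecast = {"13:00": 40, "14:00": 120, "15:00": 75}
for hour, tickets in hourly_forecast.items():
    print(hour, agents_needed(tickets, avg_handle_minutes=6))
```

Rounding up with `math.ceil` errs on the side of slight overstaffing within each interval; the cost savings in the table come from matching headcount to the forecast across intervals.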

Improving customer satisfaction with accurate responses

LSTM-powered tools like Sobot's chatbot ensure accurate responses by retaining context and understanding customer intent. This precision reduces errors and enhances the overall customer experience. For example, the chatbot can resolve queries faster, leading to higher satisfaction rates. Accurate responses also build trust, encouraging customers to return for future interactions.

Broader Applications of LSTMs

Time series forecasting in customer support

LSTMs excel in time series forecasting tasks, such as predicting support ticket volumes or peak interaction times. Their ability to capture long-term dependencies in sequential data makes them invaluable for modeling trends over extended periods. For instance, you can use LSTMs to forecast seasonal spikes in customer inquiries, enabling better resource planning. This predictive capability ensures your team is always prepared to meet customer demands efficiently.
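Before an LSTM can learn from a series such as daily ticket volumes, the series is usually cut into fixed-length input windows, each paired with the value to predict. The sketch below shows that preparation step in plain Python; the function names, the three-day window, and the ticket counts are hypothetical, and the model itself is left out. Scaling is included because LSTMs usually train more reliably on inputs in a small range.

```python
def min_max_scale(series: list[float]) -> list[float]:
    """Rescale values to [0, 1]; in practice, fit min/max on the training portion only."""
    lo, hi = min(series), max(series)
    span = (hi - lo) or 1.0          # avoid dividing by zero for a constant series
    return [(v - lo) / span for v in series]

def make_windows(series, window: int, horizon: int = 1):
    """Split a series into (input window, target) pairs for supervised training.

    The target is the value `horizon` steps after the end of each window.
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

daily_tickets = [120, 135, 128, 150, 170, 165, 180]  # hypothetical ticket counts
for inputs, target in make_windows(daily_tickets, window=3):
    print(inputs, "->", target)
```

Each input window becomes one training sequence for the LSTM, and the paired value is what it learns to predict; a longer window lets the model see more history at the cost of fewer training pairs.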

Speech recognition for voice-based interactions

LSTMs play a crucial role in speech recognition systems for voice-based customer interactions.

- They enhance emotion detection by analyzing complex speech patterns, improving the quality of customer interactions.

- These models identify subtle variations in tone, enabling accurate recognition of customer emotions.

By integrating LSTM into voice-based tools, you can deliver personalized and empathetic support, elevating the customer experience.

The Future of Long Short-Term Memory in AI

Evolving Role of LSTMs

Integration with advanced architectures like Transformers

LSTMs continue to evolve by integrating with newer architectures like Transformers, creating hybrid models that combine the strengths of both. While Transformers excel at parallel processing and capturing long-range dependencies through self-attention mechanisms, LSTMs shine in sequential data processing. This integration allows you to leverage the efficiency of Transformers alongside the memory retention capabilities of LSTMs.

- Transformers process sequences in parallel, reducing training time significantly.

- Self-attention mechanisms in Transformers improve contextual understanding over long sequences.

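- Self-attention compares every pair of positions, so a Transformer's cost grows quadratically with sequence length, whereas an LSTM's cost grows only linearly (though its steps must run one after another).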

For example, combining LSTMs with Transformers can enhance customer service tools by improving real-time intent recognition and context retention. This hybrid approach ensures that your AI systems remain both efficient and accurate, even when handling complex, multi-step interactions.

Expanding applications in customer service and beyond

LSTMs are expanding their role in customer service by enabling predictive analytics and resource optimization. By analyzing historical data, such as ticket volumes and customer behavior, LSTMs forecast future support demands. For instance, a retail company might use LSTMs to predict a surge in customer inquiries during the holiday season. This insight helps you onboard temporary staff in advance, ensuring smooth operations.

Beyond customer service, LSTMs are finding applications in areas like healthcare, where they analyze patient records to predict health outcomes, and in finance, where they model stock market trends. These advancements demonstrate the versatility of LSTMs in solving real-world problems across industries.

Innovations in Contact Center Solutions

Combining LSTMs with Sobot's AI-driven tools

Sobot integrates LSTMs into its AI-driven tools to enhance customer interaction solutions. LSTM networks analyze sequential data to predict urgency and classify support requests. This capability enables intelligent ticket routing, directing complex issues to senior agents while automating routine tasks. For example, Sobot's AI Chatbot uses LSTMs to prioritize critical issues, ensuring faster response times for urgent matters.

By combining LSTMs with Sobot's omnichannel support, you can deliver seamless customer experiences across platforms like WhatsApp and SMS. This integration reduces manual work, improves resource allocation, and boosts overall efficiency in your contact center.

Enhancing omnichannel customer experiences

LSTMs can play a pivotal role in omnichannel customer experiences by retaining context across multiple communication channels. Whether a customer interacts via live chat, email, or voice, an LSTM can carry earlier context forward, provided the conversation history (or the model's state) is stored and linked to that customer across channels. For instance, Sobot's AI Chatbot uses LSTMs to maintain conversation history, allowing you to provide consistent and personalized responses.

This capability not only improves customer satisfaction but also builds trust. Customers feel valued when their concerns are addressed seamlessly, regardless of the channel they use. By leveraging LSTMs, you can create a unified and efficient customer journey, setting your business apart in a competitive market.
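One way a system can carry context from one message or channel into the next is to keep the LSTM's final hidden and cell state from the earlier exchange and use it as the starting state for the new one. In the sketch below, the scalar weights are hand-picked and hypothetical, each message is reduced to a few made-up per-token feature values, and nothing here reflects how Sobot stores conversation history; a real deployment would need to persist that state (or the raw history) and link it to the customer across channels.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, hand-picked scalar weights (input weight, recurrent weight, bias) per gate.
WEIGHTS = {
    "f": (0.5, 0.5, 2.0),   # forget gate biased open, so the cell state tends to persist
    "i": (1.0, 0.5, 0.0),   # input gate
    "o": (1.0, 0.5, 0.0),   # output gate
    "g": (1.0, 0.5, 0.0),   # candidate values
}

def step(x: float, h: float, c: float) -> tuple[float, float]:
    """One scalar LSTM step; returns the new (hidden state, cell state)."""
    def pre(gate: str) -> float:
        wx, wh, b = WEIGHTS[gate]
        return wx * x + wh * h + b
    f, i, o = sigmoid(pre("f")), sigmoid(pre("i")), sigmoid(pre("o"))
    g = math.tanh(pre("g"))
    c = f * c + i * g
    return o * math.tanh(c), c

def encode(message: list[float], state: tuple[float, float] = (0.0, 0.0)) -> tuple[float, float]:
    """Run the cell over a message, starting from `state` (zeros means no prior context)."""
    h, c = state
    for x in message:
        h, c = step(x, h, c)
    return h, c

chat_turn = [1.0, 0.8, 0.9]   # made-up per-token features from an earlier live chat
email_turn = [0.1, -0.2]      # made-up features from a follow-up email

state_after_chat = encode(chat_turn)
with_context = encode(email_turn, state_after_chat)  # carries the chat's state forward
without_context = encode(email_turn)                 # starts from scratch
print("with context:   ", with_context)
print("without context:", without_context)
```

The same follow-up message produces a different state depending on whether the earlier conversation is carried forward. The simpler alternative is to re-encode the full stored history each time, which costs more computation but avoids keeping model state around.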

Long Short-Term Memory (LSTM) networks are a cornerstone of modern AI, excelling in tasks that require sequential data processing. Their ability to remember critical information over long periods makes them indispensable for applications like language modeling, speech recognition, and time series forecasting.

LSTMs address the vanishing gradient problem, enabling accurate predictions and better performance compared to traditional RNNs. This capability is vital for customer service tools, such as Sobot's AI Chatbot, which relies on LSTMs to understand customer intent and deliver precise responses.

As AI evolves, LSTMs will continue to be refined through variants such as LiteLSTM, a lighter-weight LSTM design aimed at efficiency, and combined with other architectures, expanding their applications. Their unique ability to manage long-term dependencies ensures they remain a critical component of innovative solutions in customer interaction and beyond.

FAQ

What makes LSTMs better than traditional RNNs for customer service?

LSTMs outperform traditional RNNs because their gating largely mitigates the vanishing gradient problem. They retain long-term dependencies, which is essential for understanding complex customer queries. For example, Sobot's AI Chatbot uses LSTMs to maintain conversation context, ensuring accurate responses even during lengthy interactions. This improves customer satisfaction and reduces the need for human intervention.

How does Sobot's AI Chatbot use LSTMs to improve efficiency?

Sobot's AI Chatbot leverages LSTMs to analyze sequential data, such as customer queries, in real time. By retaining context and understanding intent, it resolves issues autonomously. Sobot reports that this boosts productivity by 70% and reduces costs by up to 50%, making it a cost-effective solution for businesses of all sizes.

Can LSTMs handle multilingual customer interactions?

Yes, LSTMs excel at processing multilingual data. Sobot's AI Chatbot uses LSTMs to seamlessly switch between languages during conversations. For instance, if a customer alternates between English and Spanish, the chatbot retains context and delivers accurate responses, ensuring a smooth and personalized experience.

Why are LSTMs important for sentiment analysis?

LSTMs capture the nuances of human emotions in text by analyzing sequential patterns. This makes them ideal for sentiment analysis. For example, Sobot's AI Chatbot uses LSTMs to detect frustration in customer messages, enabling proactive support and improving overall satisfaction.

How do LSTMs enhance resource planning in contact centers?

LSTMs predict trends in customer interactions, such as peak inquiry times, by analyzing historical data. Sobot's contact center solutions use this capability to optimize staffing during busy periods. This ensures faster response times, reduces costs, and improves customer experiences.
