Automatic Speech Recognition vs NLP in 2025

Automatic speech recognition is simple to define: it converts spoken words into text. Natural language processing then interprets the meaning behind that text. The global voice recognition market is expected to reach USD 36.08 billion by 2030, and that growth is funding more advanced tools.

Think of it this way: Automatic Speech Recognition (ASR) is the transcriber, and NLP is the analyst who understands the intent.

In 2025, speech to text is a common term for this transcription process. The Sobot call center uses Sobot AI to combine speech to text and NLP, creating powerful, human-like customer interactions.

UNDERSTANDING ASR: THE TRANSCRIPTION LAYER

This first layer of voice technology focuses entirely on one task: converting spoken words into written text. It is the foundational step that makes all subsequent analysis possible.

THE AUTOMATIC SPEECH RECOGNITION DEFINITION

Automatic speech recognition is the process by which software turns audio into a raw text transcript. Modern systems do this with speech-to-text models that analyze sounds and map them to words. The field has a long history of innovation, and today the best speech-to-text models rely on deep learning.

Key Architectures in Modern Speech-to-Text Models 🧠

- Deep Neural Networks (DNNs): Use multiple layers to learn complex sound patterns.

- Long Short-Term Memory (LSTM) Networks: Excel at understanding sequences, like spoken sentences.

- Transformer Models: Use self-attention mechanisms to understand context within speech, powering advanced speech-to-text models like OpenAI's Whisper.

In short, automatic speech recognition is the technical process, while speech to text is the practical outcome.

ASR VS. SPEECH TO TEXT: ARE THEY DIFFERENT?

For most business purposes, the terms are interchangeable. However, a technical distinction exists: ASR is the underlying recognition technology, while speech to text often refers to the final, human-readable output.

| Feature | ASR (Automatic Speech Recognition) | Speech-to-Text (STT) |
|---|---|---|
| What it does | Converts speech into raw text data. | Produces human-readable text. |
| Use cases | Voice commands, live captions. | Transcription, dictation, meeting notes. |
| Output quality | May contain errors or miss punctuation. | More structured and readable text. |

Essentially, speech to text is the polished application of the core speech recognition technology.

THE CORE FUNCTION OF ASR

The primary function of any speech recognition system is accurate transcription. The industry measures this accuracy with a standard metric called Word Error Rate (WER), where a lower WER indicates a better transcription. Converting voice to plain text is not trivial: background noise, speaker accents, and industry-specific jargon can all degrade recognition quality. Advanced speech-to-text models are trained on diverse data to reduce these errors and improve the final transcription.
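WER is commonly computed as (S + D + I) / N, where S, D, and I are the substituted, deleted, and inserted words needed to turn the system's output into a reference transcript, and N is the number of words in the reference. The following is a minimal sketch of that calculation using a word-level edit distance. The function name and the lowercase, whitespace-based tokenization are illustrative assumptions, not part of any particular product.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (Levenshtein dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "i want to check my order status"
hypothesis = "i want to check my older status"  # one misheard word
print(round(word_error_rate(reference, hypothesis), 3))  # 0.143 (1 error / 7 words)
```

Because the denominator is the reference length, WER can exceed 1.0 when the output contains many inserted words.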

COMMON SPEECH-TO-TEXT APPLICATIONS

The use of speech to text technology is widespread and growing. Many industries benefit from these powerful tools. Common speech-to-text applications include:

- Voice Commands: Over 65% of new vehicles in Germany now include speech-enabled features for hands-free control.

- Clinical Documentation: Doctors use speech to text for dictating patient notes, which enables faster record-keeping.

- Call Center Operations: Businesses use real-time transcription to monitor calls for quality and compliance and to surface insights while the call is still in progress.

- Meeting Productivity: Teams use speech to text for transcribing meetings, creating searchable records of discussions and action items.

UNLOCKING MEANING: THE NLP LAYER

If ASR is the "what," then Natural Language Processing (NLP) is the "why." After a speech to text system transcribes spoken words, NLP steps in to interpret their meaning. This technology gives computers the ability to read, understand, and derive value from human language. It is the intelligence layer that transforms a simple transcript into actionable insight.

DEFINING NATURAL LANGUAGE PROCESSING

Natural Language Processing is a field of artificial intelligence. It enables machines to understand the nuances of human language. ASR stops at converting audio to words. NLP goes much further: it analyzes the structure and meaning of those words to figure out what a person truly wants.

ANALYZING THE 'WHY': INTENT AND SENTIMENT

NLP excels at identifying two critical pieces of information: intent and sentiment.

- Intent Recognition identifies the customer's goal. An NLP model analyzes a query like "I want to cancel my subscription" to classify its purpose. The system identifies keywords like "cancel" and "subscription" to categorize the request as a 'cancellation request'.

- Sentiment Analysis detects the emotion behind the words. Models classify feelings into categories like positive, negative, neutral, or even urgent. This helps businesses understand if a customer is happy, angry, or just asking a simple question.
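To make the two ideas concrete, here is a deliberately simple keyword-based sketch. Production systems typically use trained models rather than word lists, and every intent name, keyword set, and function name below is a hypothetical choice made for illustration.

```python
import re

# Hypothetical keyword sets; a real system would learn these from data.
INTENT_KEYWORDS = {
    "cancellation_request": {"cancel", "subscription"},
    "check_order_status": {"order", "status"},
}
POSITIVE_WORDS = {"thanks", "great", "happy", "love"}
NEGATIVE_WORDS = {"angry", "terrible", "frustrated", "unacceptable"}


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def classify_intent(text: str) -> str:
    """Pick the intent whose keywords overlap most with the text."""
    tokens = tokenize(text)
    best_intent, best_hits = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(tokens & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent


def classify_sentiment(text: str) -> str:
    """Compare counts of positive and negative words."""
    tokens = tokenize(text)
    score = len(tokens & POSITIVE_WORDS) - len(tokens & NEGATIVE_WORDS)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


query = "I want to cancel my subscription"
print(classify_intent(query), classify_sentiment(query))
```

A word-list approach like this misses paraphrases and sarcasm, which is one reason trained models are preferred in practice.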

KEY NLP TASKS FOR CUSTOMER SERVICE

Several core NLP tasks are vital for modern customer service. These tasks help systems pull structured details and context out of free-form conversations.

Key NLP Tasks in Action ⚙️

- Named Entity Recognition (NER): This process identifies and extracts key information from text. It can pinpoint product names, dates, locations, and customer names from a service transcript.

- Topic Modeling: This technique automatically discovers recurring themes in thousands of conversations. It can group discussions into topics like "Late Delivery" or "Refund," helping businesses spot widespread issues.
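As a rough illustration of entity extraction, the sketch below uses regular expressions to pull two kinds of entities from a transcript. Real NER systems are usually statistical models that also handle names and locations. The order ID format (two letters followed by six digits) and the ISO date format are assumptions chosen only for this example.

```python
import re

# Hypothetical entity patterns chosen for illustration.
PATTERNS = {
    "ORDER_ID": re.compile(r"\b[A-Z]{2}\d{6}\b"),   # e.g. AB123456
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),   # ISO dates such as 2025-03-14
}


def extract_entities(text: str) -> list:
    """Return (label, value) pairs in the order they appear in the text."""
    found = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), label, match.group()))
    found.sort()  # order by position in the transcript
    return [(label, value) for _, label, value in found]


transcript = "Order AB123456 placed on 2025-03-14 has not arrived"
print(extract_entities(transcript))
```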

HOW NLP POWERS INTELLIGENT AUTOMATION

NLP is the engine behind smart automation in the contact center. It uses the insights from intent, sentiment, and entity recognition to trigger automated workflows. For example, NLP can automatically route support tickets. A query about a payment goes directly to the billing department. An angry customer's ticket gets prioritized for immediate attention. This intelligent routing ensures customers get the right help, faster.
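The routing rules described above can be sketched as a small lookup plus a priority check. The queue names, intent labels, and the Ticket class are hypothetical. They stand in for whatever a real ticketing system would define.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    text: str
    intent: str      # e.g. output of an intent classifier
    sentiment: str   # "positive", "neutral", or "negative"


# Hypothetical mapping from detected intent to support queue.
QUEUE_BY_INTENT = {
    "payment_query": "billing",
    "check_order_status": "order_support",
    "cancellation_request": "retention",
}


def route(ticket: Ticket) -> tuple:
    """Return (queue, priority) for a ticket."""
    queue = QUEUE_BY_INTENT.get(ticket.intent, "general_support")
    # Negative sentiment bumps the ticket up for immediate attention.
    priority = "high" if ticket.sentiment == "negative" else "normal"
    return queue, priority


print(route(Ticket("My card was charged twice!", "payment_query", "negative")))
```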

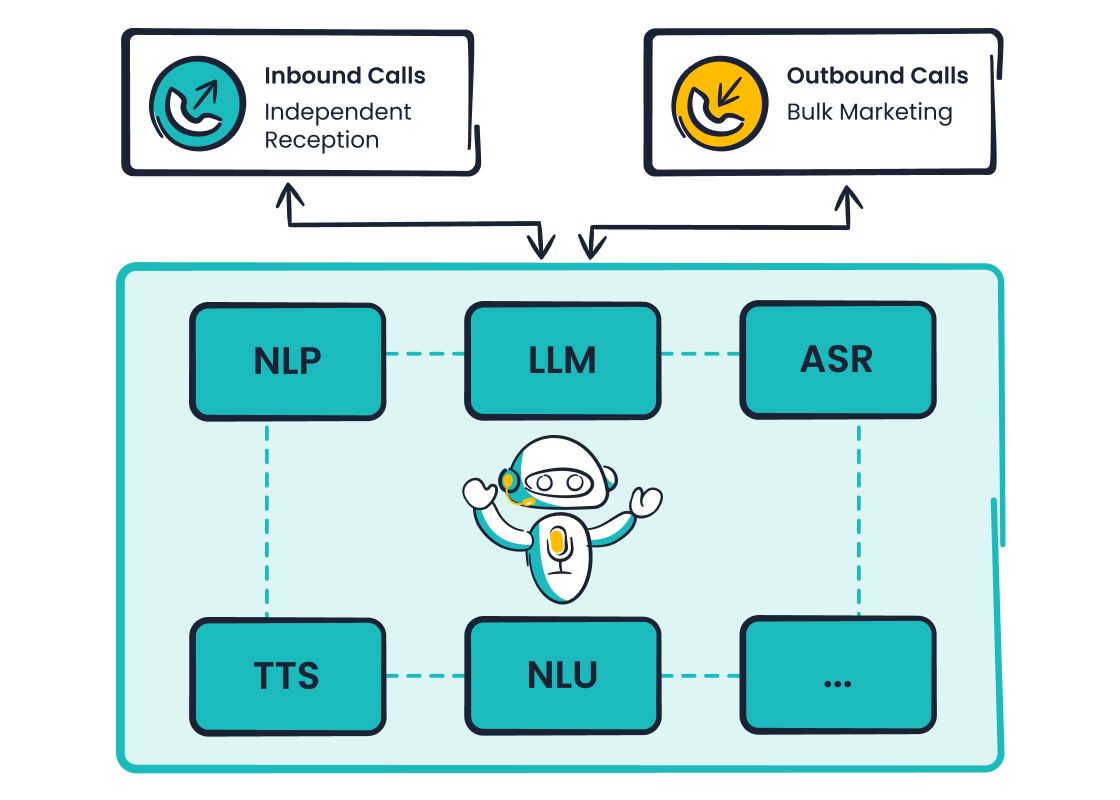

SOBOT VOICEBOT: ASR AND NLP IN ACTION

Theoretical concepts become powerful tools when put into practice. The Sobot Voicebot perfectly illustrates how ASR and NLP combine to create a seamless, intelligent customer experience. This process unfolds in a few clear steps, transforming a customer's spoken words into a resolved issue.

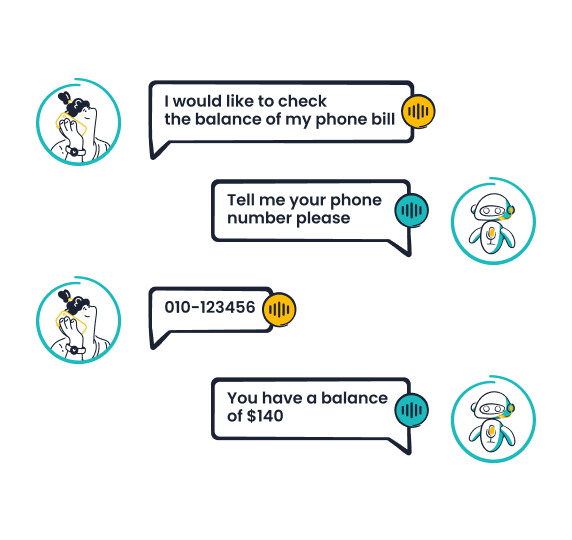

STEP 1: THE CUSTOMER SPEAKS (ASR)

The interaction begins when a customer calls. Imagine a customer saying, "Hi, I want to check my order status."

The Sobot Voicebot’s first task is to listen. Its advanced Automatic Speech Recognition (ASR) technology immediately gets to work. The system captures the audio and performs a rapid speech to text conversion.

From Sound to Text 🗣️➡️📄

- Customer Speaks: "I want to check my order status."

- ASR Output (Transcription):

i want to check my order status

This initial transcription is the raw material for the next, more intelligent layer. The system now has a text-based version of the customer's request, completing the first critical step of the speech to text process.

STEP 2: THE SYSTEM UNDERSTANDS (NLP)

With a clean transcription, the Sobot Voicebot moves from hearing to understanding. This is where its powerful Natural Language Processing (NLP) and Large Language Model (LLM) technologies take over. The system does not just read the words; it analyzes them to uncover the customer's true goal.

Sobot employs Natural Language Understanding (NLU) to decipher user intent. It uses LLMs to map the text to high-dimensional spaces called embeddings. These embeddings represent semantic meaning, allowing the AI to grasp the subtleties of the request. Sobot integrates this advanced LLM technology with multiple internally trained AI models. This ensures the communication is accurate and responsive.
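The idea behind embeddings is that requests with similar meaning end up close together in vector space, and closeness is often measured with cosine similarity. The sketch below uses hand-written three-dimensional vectors purely for illustration. Real embeddings come from a trained model and have hundreds or thousands of dimensions, and nothing here reflects Sobot's actual models.

```python
import math

# Toy vectors invented for this example; not produced by any real model.
TOY_EMBEDDINGS = {
    "where is my package": [0.9, 0.1, 0.0],
    "check my order status": [0.8, 0.2, 0.1],
    "i want a refund": [0.1, 0.9, 0.2],
}


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


query = TOY_EMBEDDINGS["where is my package"]
for phrase, vector in TOY_EMBEDDINGS.items():
    print(phrase, round(cosine_similarity(query, vector), 2))
```

In this toy setup, the two order-related phrases score much closer to each other than either does to the refund request, even though they share almost no words.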

The system identifies the core intent from the speech to text output.

- Keywords Identified: "check," "order status"

- Intent Classified:

check_order_status

This classification depends on how the system is trained:

- Define Goals: The system is first taught to identify specific user goals, like checking an order or making a payment.

- Gather Data: It learns from diverse data, including thousands of real-life customer-agent conversations and audio recordings.

- Build Intents: Developers create intents (user goals) and supply various training phrases. For the check_order_status intent, phrases could include "Where is my stuff?" or "Can you tell me my order's location?"

- Optimize and Iterate: The system's performance is constantly monitored. If a phrase is misunderstood, it is added to the correct intent's training vocabulary to improve future interactions.
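The build-and-iterate loop can be sketched with a toy matcher that compares an utterance to each intent's training phrases by word overlap (Jaccard similarity). This is an assumption-laden stand-in for a trained NLU model: the make_payment intent, the 0.3 threshold, and all function names are hypothetical.

```python
import re

# Training phrases per intent; check_order_status examples come from the text above.
TRAINING_PHRASES = {
    "check_order_status": [
        "I want to check my order status",
        "Where is my stuff?",
        "Can you tell me my order's location?",
    ],
    "make_payment": ["I want to make a payment", "Can I pay my bill?"],
}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Shared words divided by total distinct words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_intent(utterance: str, threshold: float = 0.3) -> str:
    """Return the best-matching intent, or 'fallback' if nothing is close enough."""
    utt = tokens(utterance)
    best_intent, best_score = "fallback", 0.0
    for intent, phrases in TRAINING_PHRASES.items():
        for phrase in phrases:
            score = jaccard(utt, tokens(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else "fallback"


print(match_intent("track my parcel"))  # not understood yet
# Optimize and iterate: add the misunderstood phrase to the right intent.
TRAINING_PHRASES["check_order_status"].append("Track my parcel")
print(match_intent("track my parcel"))  # now matches check_order_status
```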

STEP 3: THE SYSTEM RESPONDS (AI)

Once the Voicebot understands the customer's intent, it takes action. It uses this information to initiate a process or provide an answer. For a check_order_status request, the Voicebot needs access to order information.

Sobot's platform excels here due to its powerful integration capabilities. The Voicebot connects directly with backend business systems through APIs. These systems include:

- CRM platforms

- E-commerce platforms

- Ticketing systems

The Voicebot can query a company's internal CRM or order management system in real-time. It retrieves the relevant data and provides the customer with a direct, accurate response. For example, it might say, "Your order is currently out for delivery and is expected to arrive today by 5 PM." This automated fulfillment of the request, powered by the initial speech to text transcription, happens in seconds.
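A simplified version of this fulfillment step might look like the sketch below. The in-memory ORDERS dictionary stands in for a real CRM or order management API, and the order IDs, field names, and response templates are all hypothetical.

```python
from typing import Optional

# Hypothetical in-memory store standing in for a backend system reached via API.
ORDERS = {
    "A1001": {"status": "out_for_delivery", "eta": "today by 5 PM"},
    "A1002": {"status": "processing", "eta": None},
}

STATUS_TEXT = {
    "out_for_delivery": "out for delivery",
    "processing": "being processed",
}


def fetch_order(order_id: str) -> Optional[dict]:
    """Placeholder for an API call to the order system."""
    return ORDERS.get(order_id)


def respond(intent: str, order_id: str) -> str:
    """Turn a recognized intent plus backend data into a spoken reply."""
    if intent != "check_order_status":
        return "Let me connect you with someone who can help."
    order = fetch_order(order_id)
    if order is None:
        return "I could not find an order with that number."
    message = f"Your order is currently {STATUS_TEXT[order['status']]}"
    if order["eta"]:
        message += f" and is expected to arrive {order['eta']}"
    return message + "."


print(respond("check_order_status", "A1001"))
```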

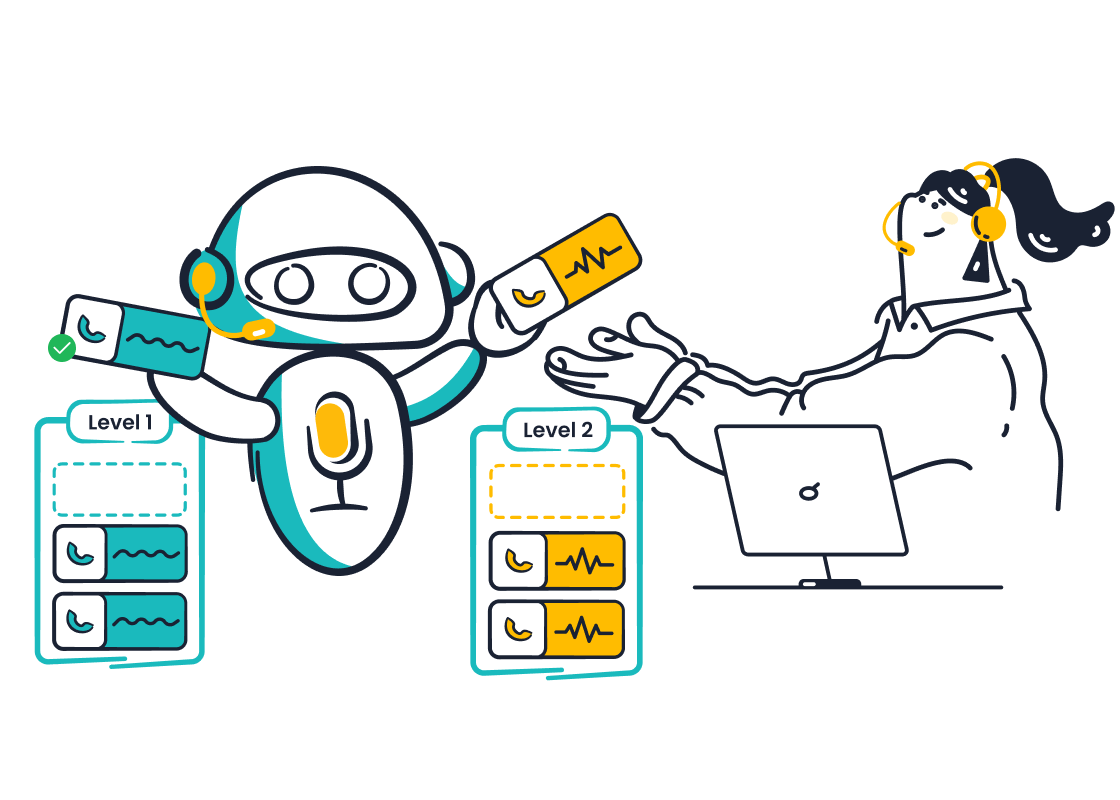

SEAMLESS HUMAN-AI COLLABORATION

Not every interaction can be fully automated. The Sobot Voicebot is designed to recognize its own limitations and the customer's emotional state. The NLP layer analyzes conversations for signs of high complexity, frustration, or negative sentiment.

If the system detects a complex issue it is not trained to handle, or if the customer says, "I need to speak to a person," it initiates a seamless handoff. The Voicebot automatically routes the call to the appropriate human agent. It also passes along the conversation history and the initial speech to text data. This ensures the agent has full context and can resolve the issue without asking the customer to repeat information. This intelligent collaboration combines the efficiency of AI with the empathy of human support.
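The handoff decision can be sketched as a few ordered checks. The trigger phrases, the failed-turn limit, and the payload fields are assumptions for illustration and do not describe how Sobot implements this.

```python
from typing import Optional

# Hypothetical phrases that signal an explicit request for a human.
AGENT_REQUEST_PHRASES = ("speak to a person", "talk to a human", "human agent")


def handoff_reason(utterance: str, intent: str, sentiment: str,
                   failed_turns: int, max_failed_turns: int = 2) -> Optional[str]:
    """Return why the call should go to a human, or None to stay automated."""
    text = utterance.lower()
    if any(phrase in text for phrase in AGENT_REQUEST_PHRASES):
        return "customer_requested_agent"
    if intent == "fallback" and failed_turns >= max_failed_turns:
        return "unrecognized_request"
    if sentiment == "negative":
        return "negative_sentiment"
    return None


def build_handoff(reason: str, transcript: list) -> dict:
    """Bundle the context so the agent does not have to ask the customer again."""
    return {"reason": reason, "transcript": list(transcript)}


history = ["i want to check my order status", "i need to speak to a person"]
reason = handoff_reason(history[-1], "check_order_status", "neutral", failed_turns=0)
if reason:
    print(build_handoff(reason, history))
```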

BUSINESS IMPACT IN THE OMNICHANNEL CONTACT CENTER

Integrating ASR and NLP technologies within an omnichannel contact center creates significant business value. Sobot's Omnichannel Solution unifies these tools across all customer touchpoints. This synergy transforms customer service from a cost center into a powerful driver of growth and loyalty.

ENHANCING CUSTOMER SATISFACTION

Faster issue resolution directly leads to happier customers. When systems solve problems on the first interaction, customer satisfaction can increase by 25-30%. This principle is clear in the success of Sobot's clients.

Case Study: Weee! 🛒 America's largest online Asian supermarket, Weee!, implemented Sobot's solutions to streamline its customer service. The result was a remarkable 96% customer satisfaction score.

By using ASR and NLP to quickly understand and route inquiries, businesses provide the immediate, effective support that modern customers expect.

BOOSTING AGENT EFFICIENCY

Automation handles repetitive tasks, freeing human agents to focus on complex issues. The combination of ASR transcription and NLP intent recognition gives agents the context they need before they even speak to a customer. This dramatically improves team performance.

The Weee! case study demonstrates this impact:

- Agent efficiency increased by 20%.

- Resolution time was reduced by 50%.

When agents spend less time on manual data entry and repetitive questions, they can resolve more issues and provide higher-quality support.

REDUCING OPERATIONAL COSTS

Automating customer interactions is a proven strategy for cost reduction. Studies show that implementing conversational AI can lower contact center costs by 30%. The Sobot Voicebot takes this even further. By automating common inquiries from start to finish, it delivers substantial savings.

The value is clear:

- Sobot's Voicebot can reduce the cost-per-contact by up to 80%.

This efficiency allows businesses to scale their support operations without a proportional increase in headcount, directly benefiting the bottom line.

UNLOCKING DATA-DRIVEN INSIGHTS

Every customer interaction is a source of valuable data. ASR transcribes calls, and NLP analyzes them for intent, sentiment, and key topics. Sobot's Omnichannel Solution gathers these insights from every channel into a single platform. This creates a treasure trove of business intelligence that helps companies:

- Identify recurring customer pain points.

- Discover opportunities for product improvement.

- Track agent and system effectiveness over time.

This data-driven approach enables continuous improvement across the entire organization.

The key difference is simple. Automatic Speech Recognition (ASR) provides the initial speech to text transcription. Natural Language Processing understands what those words mean. The true power in modern customer service comes from integrating these technologies, and every downstream step depends on an accurate transcription. Solutions like Sobot's Voicebot show this value: they use speech to text to capture requests and NLP to resolve them, turning a spoken request into a seamless customer experience.

Ready to transform your customer interactions? Embark on Your Contact Journey by exploring intelligent, omnichannel solutions.

FAQ

What is the main difference between ASR and NLP?

Automatic Speech Recognition (ASR) technology converts spoken words into written text. This process is called transcription. Natural Language Processing (NLP) then analyzes that text to understand its meaning, intent, and sentiment. ASR hears the words, while NLP understands the goal behind them.

Why do voicebots need both speech to text and NLP?

A voicebot needs speech to text to transcribe a customer's spoken request. It then needs NLP to understand what the customer wants to do. Using both technologies allows a system like the Sobot Voicebot to provide accurate, intelligent responses instead of just transcribing words.

How accurate is modern speech recognition?

Modern speech recognition systems are highly accurate. Top-tier ASR models achieve accuracy rates over 95% in ideal conditions. Factors like background noise or strong accents can affect performance. However, advanced systems use AI to improve transcription quality continuously.

What role does AI play in ASR and NLP?

AI powers both ASR and NLP. In ASR, AI models learn to recognize sounds and convert them into text. In NLP, AI helps the system understand grammar, context, and user intent. This intelligence enables automated tools to have human-like conversations and solve complex problems.
