The Role of AI in Automatic Speech Recognition

AI has transformed Automatic Speech Recognition (ASR), making it a cornerstone of modern customer service. You can now experience faster and more efficient interactions, thanks to ASR tools that automate tasks and provide real-time transcription. These systems ensure 24/7 availability, reducing frustration by offering immediate assistance. Businesses report up to a 30% reduction in call handling costs, while personalized experiences improve customer satisfaction and loyalty.

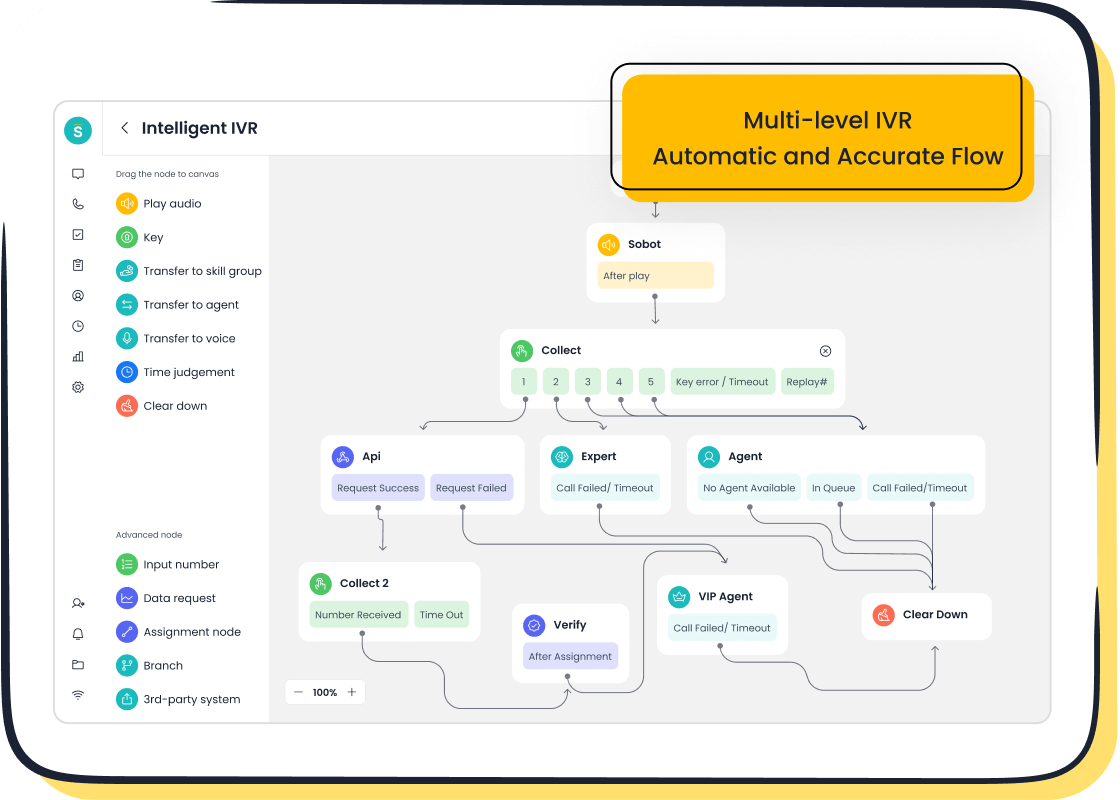

Sobot's Voice/Call Center exemplifies this transformation. Its AI-powered features, like intelligent IVR and multilingual support, streamline communication. For instance, companies like Weee! have achieved a 20% boost in agent efficiency and a 50% reduction in resolution time. With ASR, you can expect seamless, accurate, and adaptive customer interactions.

Understanding Automatic Speech Recognition (ASR) and AI

What is Automatic Speech Recognition?

Definition and purpose of ASR

Automatic speech recognition (ASR) refers to the technology that converts spoken language into written text. Its primary purpose is to enable machines to understand and process human speech, bridging the gap between humans and computers. ASR systems rely on advanced algorithms to interpret speech patterns and transform them into meaningful text. This capability has revolutionized how businesses and individuals interact with technology, making communication faster and more efficient.

Applications in customer service and call centers

ASR plays a vital role in customer service, especially in call centers. It automates tasks like call routing, transcription, and data entry, reducing the workload for agents. For example, Sobot's Voice/Call Center uses ASR to provide real-time transcription and intelligent IVR systems. These features allow businesses to handle high call volumes efficiently while maintaining personalized customer interactions. Companies like Weee! have leveraged ASR to overcome language barriers and improve resolution times, achieving a 96% customer satisfaction score.

How AI Enhances ASR

AI's role in speech-to-text conversion

AI has significantly improved ASR by enhancing its speech-to-text capabilities. Traditional systems struggled with complex speech patterns, but AI-powered ASR systems excel in understanding accents, dialects, and noisy environments. Deep learning models, such as those used in Sobot's AI-powered Voicebot, process speech data with remarkable accuracy. These models analyze acoustic signals, predict word sequences, and deliver precise transcriptions, even in challenging scenarios.

Importance of data and algorithms in ASR systems

Data and algorithms form the backbone of ASR systems. Core components like acoustic modeling, language modeling, and feature extraction rely on vast datasets to train and adapt. Advanced algorithms, including convolutional and recurrent neural networks, enhance ASR's ability to recognize speech patterns and context. For instance, end-to-end ASR systems use deep learning to map acoustic features directly to text, streamlining the process and improving accuracy. These innovations make ASR indispensable for businesses aiming to optimize customer communication.
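
To make the feature extraction step more concrete, here is a minimal sketch in Python. It assumes 16 kHz audio, 25 ms frames (400 samples), and a 10 ms hop (160 samples), which are common choices rather than values from any particular product. It computes one log-energy value per frame as a simplified stand-in for the log-mel or MFCC features that production systems typically use. The function names are hypothetical.

```python
import math

def frame_signal(samples, frame_len, hop_len):
    """Split a list of audio samples into overlapping frames."""
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frames.append(samples[start:start + frame_len])
    return frames

def hamming(frame_len):
    """Hamming window coefficients for one frame."""
    if frame_len == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
            for n in range(frame_len)]

def log_energy_features(samples, frame_len=400, hop_len=160):
    """Return one log-energy value per windowed frame."""
    window = hamming(frame_len)
    features = []
    for frame in frame_signal(samples, frame_len, hop_len):
        energy = sum((s * w) ** 2 for s, w in zip(frame, window))
        features.append(math.log(energy + 1e-10))  # small constant avoids log(0)
    return features

# Synthetic input: one second of a 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
tone = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(sample_rate)]
features = log_energy_features(tone)
print(len(features))
```

An acoustic model would consume a sequence of such per-frame features instead of the raw waveform.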

Key Machine Learning Paradigms in ASR

Supervised Learning in ASR

Training ASR models with labeled speech data

Supervised learning plays a crucial role in training ASR systems. You provide labeled speech data, where each audio file has a corresponding transcript, to teach the system how to map spoken words to text. This process involves discriminative learning techniques that optimize the model's ability to distinguish between different speech patterns. For example, Sobot's Voice/Call Center uses supervised learning to enhance its AI-powered Voicebot, ensuring accurate transcription and seamless customer interactions. By leveraging labeled datasets, ASR systems achieve high accuracy in recognizing speech, even in complex scenarios.

Examples of supervised learning in ASR applications

Supervised learning powers many real-world ASR applications:

- Virtual Assistants: Siri, Alexa, and Google Assistant rely on ASR for voice command recognition.

- Transcription Services: ASR converts audio into text, aiding accessibility and content creation.

- Customer Service and Support: ASR enhances IVR systems for efficient customer interactions.

- Language Learning and Education: Apps like Duolingo use ASR for pronunciation evaluation and automated captions.

Unsupervised Learning in ASR

Leveraging unlabeled data for speech recognition

Unsupervised learning allows ASR systems to utilize unlabeled data, which is abundant and cost-effective. Self-supervised learning techniques, a subset of unsupervised learning, train a model to predict masked or future portions of raw audio, so it learns useful speech representations without transcripts. These methods help ASR systems generalize across accents, dialects, and languages. For instance, Sobot's ASR solutions benefit from these techniques to adapt to diverse customer needs, ensuring reliable performance in multilingual environments.

Benefits and limitations of unsupervised approaches

Unsupervised learning offers several advantages:

- It reduces the dependency on expensive labeled datasets.

- It enhances the system's ability to handle linguistic diversity.

- It improves the generalization of ASR systems across various scenarios.

However, challenges include potential inaccuracies in pseudo-labels and the complexity of self-supervised methods. Despite these limitations, unsupervised learning remains a valuable tool for advancing ASR technology.
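
One common way to limit pseudo-label errors is to keep only transcripts that an existing model produced with high confidence. The sketch below illustrates that idea under stated assumptions: the tuple layout, the `select_pseudo_labels` name, the 0.9 threshold, and the sample data are all hypothetical.

```python
def select_pseudo_labels(predictions, min_confidence=0.9):
    """Keep only machine-generated transcripts whose confidence is high enough.

    `predictions` is a list of (audio_id, transcript, confidence) tuples
    produced by some existing model; this layout is an assumption.
    """
    kept = []
    for audio_id, transcript, confidence in predictions:
        # Drop empty transcripts as well as low-confidence ones.
        if confidence >= min_confidence and transcript.strip():
            kept.append((audio_id, transcript))
    return kept

# Made-up predictions for illustration only.
predictions = [
    ("call_001", "i want to check my order", 0.97),
    ("call_002", "uh the the thing", 0.41),
    ("call_003", "", 0.99),
    ("call_004", "please cancel my subscription", 0.92),
]
pseudo_labeled = select_pseudo_labels(predictions)
print(pseudo_labeled)
```

The retained pairs can then be added to the training set, trading some data volume for cleaner labels.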

Deep Learning and Neural Networks

Role of deep learning in feature extraction and pattern recognition

Deep learning has revolutionized ASR by enabling advanced feature extraction and pattern recognition. You can think of it as the backbone of modern ASR systems. Techniques like discriminative learning allow models to identify subtle differences in speech signals, improving transcription accuracy. Deep learning also facilitates the integration of ASR with other AI technologies, such as natural language processing, for enhanced functionality.

Popular architectures like RNNs, LSTMs, and Transformers

Several neural network architectures drive ASR systems:

- Recurrent Neural Networks (RNNs): Process sequential data, making them ideal for speech recognition.

- Long Short-Term Memory networks (LSTMs): Address long-term dependencies in speech by using gates to control which information is kept or discarded over time.

- Transformer Models: Excel in parallel processing, enabling faster and more accurate transcription.

These architectures, combined with Sobot's robust ASR solutions, ensure reliable and scalable performance for businesses worldwide.
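
The parallelism of Transformer models comes from self-attention, in which every frame is compared with every other frame independently of processing order. The following is a minimal sketch of scaled dot-product self-attention in plain Python. As a simplifying assumption, queries, keys, and values are the input frames themselves, whereas real models apply learned projections first.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(frames):
    """Scaled dot-product self-attention with queries = keys = values = frames."""
    dim = len(frames[0])
    outputs = []
    # Each query is handled independently, so this loop could run in parallel.
    for query in frames:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in frames]
        weights = softmax(scores)
        outputs.append([sum(w * frame[d] for w, frame in zip(weights, frames))
                        for d in range(dim)])
    return outputs

# Three toy two-dimensional "frames" standing in for acoustic features.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(frames)
print(attended)
```

By contrast, an RNN or LSTM must process frame `t` before frame `t + 1`, which limits how much of the work can run at once.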

Challenges in ASR Development

Handling Diverse Accents and Dialects

The impact of linguistic diversity on ASR performance

You may have noticed that ASR systems sometimes struggle with accents or dialects. Variations in pronunciation often lead to misrecognition of words. Differences in intonation can confuse how speech is interpreted. Distinct speech patterns across accents significantly reduce recognition accuracy. For instance, an ASR system trained on American English may find it challenging to understand British or Indian accents. These challenges highlight the importance of adapting ASR systems to linguistic diversity.

AI solutions for accent adaptation

AI has made significant strides in addressing accent-related challenges. Multi-accent training, where models learn from diverse datasets, enhances generalization across accents. Transfer learning allows systems to fine-tune pre-trained models on smaller, accent-specific datasets. Additionally, adapting language models to include accent-specific vocabulary improves transcription accuracy. Sobot’s Voice/Call Center leverages these techniques to ensure reliable performance, even in multilingual environments.

Background Noise and Environmental Factors

Challenges of noisy environments in speech recognition

Background noise disrupts ASR systems by interfering with speech clarity. Poor audio quality further complicates transcription, reducing accuracy. For example, a customer calling from a busy street may experience misinterpretation of their words. This issue is particularly critical in real-world applications like customer service, where clear communication is essential.

Techniques like noise reduction and signal processing

Noise reduction algorithms play a vital role in improving ASR performance. Spectral subtraction estimates the noise spectrum, typically from non-speech segments, and subtracts it from the spectrum of the noisy signal. Wiener filtering applies a frequency-dependent gain that attenuates the parts of the spectrum dominated by noise while aiming to minimize distortion of the speech. Adaptive filtering dynamically adjusts to changing noise conditions. These techniques, combined with AI advancements, enable systems like Sobot’s Voice/Call Center to deliver accurate transcription even in noisy environments.
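
As an illustration of spectral subtraction, the sketch below works on magnitude spectra that are assumed to have been computed elsewhere, for example with a short-time Fourier transform. It averages the power of frames assumed to contain only noise, subtracts that estimate from a noisy frame, and applies a spectral floor so no bin goes negative. The function names, the floor value, and the toy numbers are assumptions for illustration.

```python
def estimate_noise_power(noise_frames):
    """Average power spectrum over frames assumed to contain only noise."""
    num_bins = len(noise_frames[0])
    return [sum(frame[b] ** 2 for frame in noise_frames) / len(noise_frames)
            for b in range(num_bins)]

def spectral_subtract(magnitudes, noise_power, over_subtraction=1.0, floor=0.01):
    """Subtract the noise power estimate from one frame's magnitude spectrum.

    The spectral floor keeps every bin non-negative, since plain
    subtraction can otherwise produce negative power.
    """
    cleaned = []
    for mag, noise in zip(magnitudes, noise_power):
        power = mag ** 2
        reduced = max(power - over_subtraction * noise, floor * power)
        cleaned.append(reduced ** 0.5)
    return cleaned

# Toy spectra with three frequency bins (made-up values).
noise_frames = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
noise_power = estimate_noise_power(noise_frames)
noisy_frame = [1.0, 5.0, 2.0]
cleaned = spectral_subtract(noisy_frame, noise_power)
print(cleaned)
```

Bins where speech is much stronger than the noise estimate change only slightly, while bins at the noise level are pushed down to the floor.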

Multilingual and Code-Switching Scenarios

Difficulties in recognizing multiple languages or mixed speech

Multilingual ASR faces unique challenges. Code-switching, where speakers mix languages mid-sentence, complicates recognition. Limited training data for less common languages further hinders accuracy. For example, recognizing a mix of English and Hindi requires specialized models trained on code-switched datasets.

AI-driven approaches to multilingual ASR

AI-driven solutions address these challenges effectively. Acoustic models analyze unique audio patterns, while language models predict word sequences to understand context. Pronunciation dictionaries map how words sound in different languages. Sobot’s multilingual ASR capabilities utilize these techniques, ensuring seamless communication across languages.
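
The interplay between acoustic and language models can be sketched as a rescoring step: each candidate transcript has an acoustic score and a language-model score, and the decoder prefers the candidate with the best weighted sum. The scores, the weight, and the `rescore` function below are made up for illustration and do not describe any specific product.

```python
def rescore(hypotheses, lm_log_probs, lm_weight=0.5):
    """Pick the hypothesis with the best combined acoustic and language score.

    `hypotheses` maps candidate text to an acoustic log-probability and
    `lm_log_probs` maps the same text to a language-model log-probability.
    """
    def combined(text):
        return hypotheses[text] + lm_weight * lm_log_probs[text]
    return max(hypotheses, key=combined)

# Illustrative log-probabilities: the acoustics slightly favor the wrong
# phrase, but the language model strongly prefers the plausible one.
acoustic = {"recognize speech": -12.1, "wreck a nice beach": -11.8}
language = {"recognize speech": -4.0, "wreck a nice beach": -9.5}
best = rescore(acoustic, language)
print(best)
```

With the language-model weight set to zero, the acoustically closer but less plausible phrase would win, which shows why context prediction matters.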

Recent Advancements and Future Potential in ASR

Breakthroughs in AI for ASR

Use of pre-trained models like Whisper and Wav2Vec

Pre-trained models like Whisper and Wav2Vec have revolutionized ASR technology. Whisper, trained on 680,000 hours of multilingual and multitask supervised speech data, surpasses earlier models in scale and diversity. Its architecture is an encoder-decoder Transformer whose encoder begins with a small convolutional stem, enabling it to handle tasks like ASR and translation. Unlike many traditional models, Whisper outputs punctuation and capitalization, improving transcription readability, and it performs well in zero-shot settings without dataset-specific fine-tuning. Wav2Vec 2.0 takes a different route: it learns speech representations from unlabeled audio through self-supervised pre-training and can then be fine-tuned with relatively little labeled data, which makes it well suited to low-resource languages. These advancements enhance ASR systems' accuracy and adaptability across diverse scenarios.

Improvements in real-time transcription and accuracy

AI-driven innovations have significantly improved real-time transcription. Conformer and Transformer architectures now dominate ASR systems, with Conformer excelling in streaming applications. These models process speech faster and more accurately, even in noisy environments. Additionally, newer evaluation metrics aim to align better with human perception, addressing a limitation of word error rate (WER): it counts every word error equally, regardless of how much the error changes the meaning. These breakthroughs help ASR tools deliver precise and efficient transcription, transforming industries like customer service and education.
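
For reference, word error rate is the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The sketch below is a minimal Python version; the function name and the whitespace tokenization are simplifying assumptions.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("sat" -> "sit") and one deletion ("the") in six words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(round(wer, 3))
```

Because every substitution, insertion, and deletion costs the same, a harmless filler-word error and a meaning-changing error affect the score equally.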

AI-Powered ASR in Customer Service

Enhancing customer interactions with ASR-powered tools

AI-powered ASR tools elevate customer interactions by providing 24/7 support and real-time transcription. These tools capture customer concerns accurately, enabling faster and more relevant responses. For example, Sobot’s Voice/Call Center uses intelligent IVR and multilingual support to personalize experiences. By analyzing past interactions, ASR tools create tailored responses, improving customer satisfaction and loyalty.

Automating call center operations with Sobot's Voice/Call Center

Sobot’s Voice/Call Center automates repetitive tasks, reducing operational costs and boosting efficiency. Features like smart call routing and AI-powered Voicebot streamline workflows, allowing agents to focus on complex issues. Real-time transcription ensures accurate communication, even in noisy environments. Businesses like Weee! have achieved a 20% increase in agent efficiency and a 50% reduction in resolution time using Sobot’s solutions.

Future Trends in ASR

Personalized ASR systems for individual users

Future ASR systems will adapt to individual users by learning from real-world interactions. These systems will recognize regional dialects, emotions, and unique speech patterns, enhancing personalization. For example, they could adjust to your accent or vocabulary over time, ensuring seamless communication. Continuous learning will enable ASR tools to stay relevant, offering richer outputs and improved accuracy.

Integration of ASR with other AI technologies like NLP

ASR will increasingly integrate with AI technologies like natural language processing (NLP). This combination will enable advanced applications such as speech-to-speech translation and multimodal interactions. For instance, future ASR systems could process both text and audio inputs, delivering synthesized audio or text outputs. These innovations will expand ASR’s capabilities, making it indispensable in fields like healthcare, education, and customer service.

The Transformative Impact of AI on ASR

Revolutionizing Customer Service with Sobot

Reducing response times and improving customer satisfaction

AI-powered ASR systems have transformed customer service by significantly reducing response times. Customers no longer need to sit through long hold times, which can sometimes reach 85 minutes. With ASR tools, you can interact with voice technology to get quick answers and even resolve issues independently, without speaking to a human agent. This allows businesses to handle more inquiries efficiently.

ASR systems also streamline call center operations. Calls are automatically categorized based on customer input, ensuring they are routed to the correct department. This reduces human errors and enhances workflow accuracy. AI agents process speech in real time, delivering instant replies that improve customer satisfaction. For example, Sobot’s Voice/Call Center uses smart call routing and real-time transcription to ensure faster resolutions. Businesses like Weee! have seen a 50% reduction in resolution time and a 20% boost in agent efficiency, demonstrating the transformative impact of ASR on customer service.
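
A simple way to picture transcript-based call categorization is keyword matching on the ASR output. The sketch below is a hypothetical illustration, not a description of Sobot's routing logic: the department names, keyword lists, and `route_call` function are all assumptions, and production systems typically use trained intent classifiers instead.

```python
ROUTING_KEYWORDS = {
    "billing": ["refund", "invoice", "charge", "payment"],
    "shipping": ["delivery", "package", "tracking", "shipment"],
    "technical": ["error", "login", "password", "crash"],
}

def route_call(transcript, default="general"):
    """Return the department whose keywords appear most often in the transcript."""
    # Lowercase and strip basic punctuation so "Refund!" matches "refund".
    words = {word.strip(".,!?") for word in transcript.lower().split()}
    best_department, best_hits = default, 0
    for department, keywords in ROUTING_KEYWORDS.items():
        hits = sum(1 for keyword in keywords if keyword in words)
        if hits > best_hits:
            best_department, best_hits = department, hits
    return best_department

print(route_call("I need a refund for a double charge"))
print(route_call("Where is my package?"))
print(route_call("Hello there"))
```

Calls with no matching keyword fall back to a default queue, which keeps unrecognized requests from being misrouted.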

Enabling 24/7 support with AI-powered ASR tools

ASR systems provide round-the-clock support, ensuring your customers always receive assistance when needed. This availability enhances customer satisfaction and loyalty. Sobot’s Voice/Call Center, for instance, offers intelligent IVR and AI-powered Voicebot features that automate repetitive tasks, allowing agents to focus on complex issues.

| Key Benefit | Description |
|---|---|
| 24/7 Support Availability | ASR systems provide continuous support, improving customer satisfaction and loyalty. |
| Real-time Transcription and Accuracy | Instant processing of spoken language leads to faster and more relevant responses. |
| Cost Reduction through Automation | Automating tasks reduces operational costs significantly, enhancing profitability. |
| Personalized Customer Experiences | AI agents use past interactions to tailor responses, fostering customer loyalty and engagement. |

These tools not only reduce operational costs but also create personalized experiences by analyzing past interactions. This fosters stronger customer relationships and ensures your business remains competitive in today’s fast-paced environment.

Expanding Accessibility

Helping individuals with disabilities through speech recognition

ASR technology plays a vital role in improving accessibility for individuals with disabilities. It enhances communication by converting speech into machine-readable language, making digital platforms more inclusive. You can use voice commands to control devices, access information, and interact with technology independently. This promotes greater autonomy and improves quality of life.

For individuals with limited mobility or visual impairments, ASR tools provide an essential bridge to the digital world. They foster inclusivity by enabling full participation in digital environments. Sobot’s ASR solutions, with their advanced speech-to-text capabilities, ensure that everyone can benefit from seamless communication.

Bridging communication gaps in multilingual settings

ASR systems excel in multilingual environments, helping you overcome language barriers. They support a wide range of languages, ensuring effective communication across diverse audiences. Companies can expand their global reach by connecting with new markets and fostering cultural inclusivity.

For example, Sobot’s multilingual ASR capabilities allow businesses to serve customers in multiple languages effortlessly. By analyzing unique audio patterns and predicting word sequences, these systems deliver accurate transcriptions, even in code-switching scenarios. This ensures that your business can communicate effectively, no matter the language or region.

AI and machine learning have transformed ASR into a vital tool for modern businesses. Deep learning technologies now enable ASR systems to handle noisy environments and diverse accents with remarkable accuracy. End-to-end models process complex speech patterns more effectively, making ASR indispensable in customer service. For example, Sobot’s Voice/Call Center uses AI to automate tasks, reduce costs, and improve customer satisfaction.

By 2030, ASR systems are expected to become more personalized and accessible. They should adapt to new languages and challenging environments, helping businesses connect with diverse audiences. Innovations such as pipelines that combine speech enhancement, ASR, and speaker diarization will make ASR tools more reliable and inclusive. Sobot continues to lead this evolution, ensuring businesses benefit from cutting-edge ASR solutions.

With AI-powered ASR, you can expect faster, more accurate, and personalized interactions, revolutionizing how businesses communicate with their customers.

FAQ

What industries benefit the most from ASR technology?

ASR technology benefits industries like customer service, healthcare, education, and retail. For example, call centers use ASR to automate call routing and transcription. Retailers like Weee! leverage ASR products to overcome language barriers, achieving a 96% customer satisfaction score. These tools streamline operations and enhance user experiences across sectors.

How does ASR handle noisy environments?

ASR systems use advanced noise reduction techniques like spectral subtraction and adaptive filtering. These methods improve transcription accuracy in challenging environments. Sobot’s pioneering ASR technology ensures reliable performance, even in noisy settings like busy streets or crowded offices, making it ideal for real-world applications.

Can ASR systems recognize multiple languages?

Yes, modern ASR systems support multilingual capabilities. They analyze unique audio patterns and predict word sequences for accurate transcription. Sobot’s ASR products excel in multilingual environments, helping businesses communicate effectively across diverse audiences and even handle code-switching scenarios seamlessly.

What makes AI-powered ASR different from traditional systems?

AI-powered ASR uses deep learning models to process speech with higher accuracy. Unlike traditional systems, it adapts to accents, dialects, and noisy conditions. Sobot’s pioneering ASR technology integrates features like intelligent IVR and real-time transcription, ensuring faster and more personalized customer interactions.

How does ASR improve customer service?

ASR automates repetitive tasks like call routing and transcription, reducing response times. It also enables 24/7 support and personalized interactions. Sobot’s Voice/Call Center, for instance, helped Weee! achieve a 20% boost in agent efficiency and a 50% reduction in resolution time, transforming customer service operations.
