With the rapid advancement of AI technologies, AI voicebots are becoming an integral part of our daily lives. From customer service to personal assistants, these intelligent systems are designed to understand and respond to human speech. But many users wonder: does a voicebot learn my pronunciation? Let’s explore how AI voicebots handle speech, how they adapt to individual users, and what this means for the future of voice interaction.

Understanding AI Voicebots

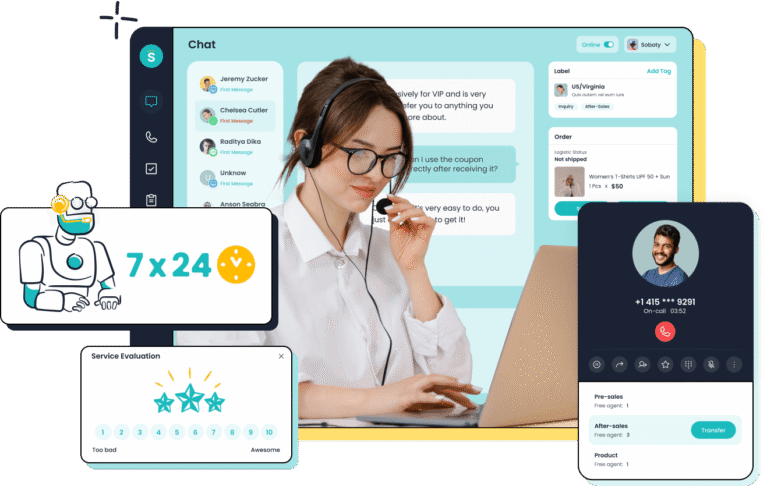

An AI voicebot is an artificial intelligence system that interacts with humans through spoken language. Unlike traditional chatbots, which rely on text, voicebots use speech recognition to turn speech into text, natural language processing (NLP) to interpret what was said, and, when the bot replies aloud, text-to-speech (TTS) to voice its response.
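To make the stages above concrete, here is a minimal Python sketch of how speech recognition, language understanding, and text-to-speech chain together. The `VoicebotPipeline` class and its stage names are hypothetical, and each stage is just a function, so the demo plugs in toy stand-ins (the "audio" is plain UTF-8 text) rather than real speech or language models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicebotPipeline:
    """Chains the stages of a voicebot. Each stage is a hypothetical placeholder:
    a real system would call a speech recognizer, an NLU model, and a TTS engine."""

    speech_to_text: Callable[[bytes], str]   # audio in, transcript out
    understand: Callable[[str], str]         # transcript in, intent label out
    respond: Callable[[str], str]            # intent label in, reply text out
    text_to_speech: Callable[[str], bytes]   # reply text in, audio out

    def handle(self, audio: bytes) -> bytes:
        transcript = self.speech_to_text(audio)
        intent = self.understand(transcript)
        reply = self.respond(intent)
        return self.text_to_speech(reply)


# Toy stand-ins for demonstration only: "audio" is just UTF-8 encoded text here.
REPLIES = {"weather": "It looks sunny today."}

bot = VoicebotPipeline(
    speech_to_text=lambda audio: audio.decode("utf-8"),
    understand=lambda text: "weather" if "weather" in text.lower() else "unknown",
    respond=lambda intent: REPLIES.get(intent, "Sorry, I didn't catch that."),
    text_to_speech=lambda text: text.encode("utf-8"),
)

print(bot.handle(b"What's the weather like?"))  # b'It looks sunny today.'
```

Keeping each stage swappable mirrors how voicebots are often assembled from separate components, so one stage (say, the speech recognizer) can be improved without touching the others.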

These systems are designed to process a wide range of accents, speech patterns, and pronunciations. Their goal is not only to understand words but also to interpret intent, tone, and context, enabling more natural and efficient conversations.
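One simple way to tolerate small recognition differences caused by accents is to fuzzy-match a transcript against known command phrases. The sketch below uses Python's standard `difflib.get_close_matches`; the command list, intent names, and 0.75 cutoff are illustrative assumptions, and production voicebots typically rely on trained language-understanding models instead.

```python
import difflib
from typing import Optional

# Hypothetical command phrases mapped to intent labels.
COMMANDS = {
    "turn on the lights": "lights_on",
    "turn off the lights": "lights_off",
    "play some music": "play_music",
}


def match_intent(transcript: str, cutoff: float = 0.75) -> Optional[str]:
    """Return the intent whose command phrase is most similar to the transcript,
    or None if no phrase reaches the similarity cutoff."""
    matches = difflib.get_close_matches(
        transcript.lower().strip(), list(COMMANDS), n=1, cutoff=cutoff
    )
    return COMMANDS[matches[0]] if matches else None


print(match_intent("Turn on the lites"))  # lights_on
print(match_intent("what time is it"))    # None
```

Here a slightly misrecognized "lites" still resolves to the lights-on command because it is the closest phrase above the cutoff, while an unrelated request falls through to `None` instead of triggering the wrong action.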

How AI Voicebots Handle Pronunciation

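Modern AI voicebots use machine learning to recognize a wide variety of speech patterns, largely because their speech recognition models are trained on recordings from many different speakers. When you speak to a voicebot, it analyzes your pronunciation, intonation, and word choice. Systems that support per-user adaptation can go a step further and, over time, improve their understanding of your particular way of speaking.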

For example, if you have a regional accent or pronounce certain words differently, the AI voicebot can learn to interpret these variations correctly. This personalized adaptation helps reduce errors, improve response accuracy, and enhance the overall user experience.
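The kind of per-user adaptation described above can be illustrated with a deliberately simplified, hypothetical sketch. Real systems may adapt their acoustic or language models or let users add custom vocabulary; this version works only on transcripts. It records corrections a user makes (for example, fixing a misheard contact name) and starts applying a correction only after it has been confirmed `min_confirmations` times, reflecting the fact that adaptation needs enough interaction data. The class and method names are illustrative, not any product's API.

```python
from collections import Counter, defaultdict


class PronunciationAdapter:
    """Hypothetical per-user correction memory operating on transcripts, not audio."""

    def __init__(self, min_confirmations: int = 2) -> None:
        self.min_confirmations = min_confirmations
        # user_id -> Counter of (heard_word, intended_word) pairs seen so far
        self._evidence: dict = defaultdict(Counter)
        # user_id -> {heard_word: intended_word}, filled once a pair is confirmed enough
        self._aliases: dict = defaultdict(dict)

    def record_correction(self, user_id: str, heard: str, intended: str) -> None:
        pair = (heard.lower(), intended.lower())
        self._evidence[user_id][pair] += 1
        if self._evidence[user_id][pair] >= self.min_confirmations:
            self._aliases[user_id][pair[0]] = pair[1]

    def adapt(self, user_id: str, transcript: str) -> str:
        # Lowercases and splits on whitespace, so punctuation handling is ignored here.
        aliases = self._aliases.get(user_id, {})
        return " ".join(aliases.get(word, word) for word in transcript.lower().split())


adapter = PronunciationAdapter(min_confirmations=2)
adapter.record_correction("alice", "Shivon", "Siobhan")
print(adapter.adapt("alice", "Call Shivon"))  # call shivon (not confirmed yet)
adapter.record_correction("alice", "Shivon", "Siobhan")
print(adapter.adapt("alice", "Call Shivon"))  # call siobhan
print(adapter.adapt("bob", "Call Shivon"))    # call shivon (bob has no corrections)
```

Keying corrections by user ID means one person's adaptations never change how the bot interprets someone else's speech.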

Benefits of a Voicebot Learning Your Pronunciation

- Improved Accuracy

By learning individual pronunciations, AI voicebots can respond more accurately to commands. This reduces frustration and makes interactions smoother.

- Personalized Experience

Voicebots that adapt to your speech create a more personal and natural interaction. The system feels less like a rigid machine and more like a responsive assistant that understands you.

- Accessibility

For users with speech impediments or strong accents, and for non-native speakers, AI voicebots that learn pronunciation make technology more accessible and inclusive.

Challenges and Limitations

While AI voicebots are powerful, they are not perfect. Learning pronunciation requires enough interaction and data, so a new user may see more errors at first. Rare words, complex sentences, or heavy accents may still pose challenges. Storing voice data for machine learning also raises privacy concerns, so users should check how a platform stores their recordings, how long it keeps them, and whether they can delete them or opt out.

The Future of Pronunciation-Adaptive Voicebots

The future of AI voicebots looks promising. As speech recognition algorithms and NLP continue to improve, voicebots are likely to become even better at understanding and adapting to individual users. This could lead to highly personalized AI assistants capable of handling complex conversations in multiple languages and dialects.

Conclusion

Yes, many modern AI voicebots can learn your pronunciation, adapting to your speech patterns to improve accuracy, accessibility, and user experience. While challenges remain, the combination of advanced machine learning and natural language processing positions AI voicebots as a key technology in personalized voice interaction. As they continue to evolve, users can expect smarter, more responsive voicebots that truly understand how they speak.